In this blog we present the model Bing uses to successfully maintain and continuously improve site performance. The model is comprised of components spanning data and tools, a dedicated performance team, processes, and organizational commitment. While each component will be familiar to most people, it’s their synthesis into a unified model that we hope teams across the industry will find useful as a template for their own performance missions.

The Bing Model for Driving Performance

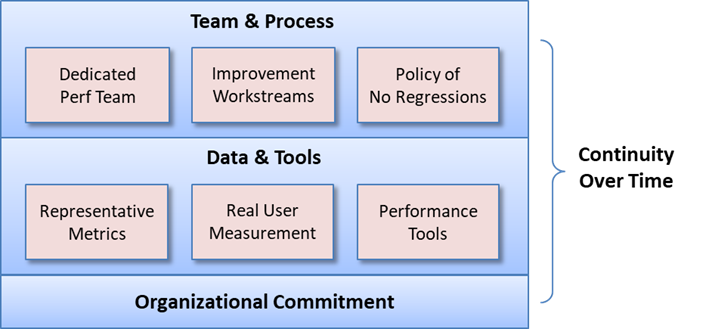

A prior blog discussed the architecture and many of the technical performance techniques used by the Bing search engine to deliver world-class performance to end users. But architecture and performance techniques alone are not sufficient to ensure fast performance.

At Bing we employ a set of necessary and sufficient components that have proven successful in the mission to maintain and continuously improve site performance. The model is shown in Figure 1.

Figure 1: Bing Model for Driving Performance

Necessary and sufficient means that each component of the model is necessary, and collectively they are sufficient to drive a successful performance mission. Additionally, each component must be executed with a high standard of excellence. A component that is either missing or poorly executed will undermine the mission.

This model has been developed over time through experience and learnings, both at Bing and other divisions at Microsoft. The following sections discuss each component of the model.

Organizational Commitment

Strong organizational commitment to performance is the foundation, and its importance cannot be overstated because all other components of the model depend on it. At Bing there is an unwavering commitment to performance at the highest levels in the organization. For example:

- Performance doesn’t need to be justified; it’s known to be good for users and good for business.

- Performance isn’t traded off against features. There is a win-win attitude of delivering both new features and performance and, importantly, this is reflected in ship criteria that prevent regressions.

- Significant resources are allocated to performance.

An anti-pattern to watch out for in your own organizations is lip service to performance. The importance may be understood but isn’t backed up by treating performance as a top priority when it comes to resource allocation, ship criteria, or architectural decisions.

Data & Tools

Excellent quantitative data and tools for developers are critical. At Bing the performance team (discussed later) is responsible for providing solutions in this space.

Representative Metrics

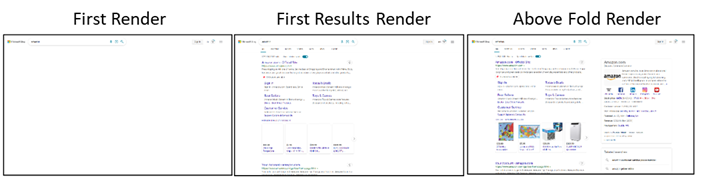

Fast performance is in service of desirable business outcomes such as user satisfaction, loyalty, and engagement. Thus, it’s important to have performance metrics that represent end-user perceived performance, so that an improvement in the performance metrics can be assumed to have a beneficial impact on business metrics.

Which performance metrics to use depends on the specific experience being measured and what makes users perceive it to be fast. Many metrics are used at Bing depending on the scenario. For the main search results page, we primarily focus on three metrics representing rendering performance: First Render, First Results Render, and Above Fold Render, which correspond to the main rendering phases of the experience.

Figure 2: Bing Rendering Sequence in Slow Motion for Main Search Results Page

Real User Measurement

Collecting performance metrics from real users is called RUM, or Real User Monitoring. Measuring performance in the wild is essential as it represents the “truth” across the myriad of different conditions (network speeds, device speeds, browsers, etc.) in the real user population. At Bing, RUM data is used during the A/B Experimentation and Live Site phases as discussed in the next section.

Performance is analyzed and tracked across the full distribution of percentiles, but the 90th percentile is primarily used for detailed tracking, goal setting, and accountability for end user performance.
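To make the percentile tracking concrete, here is a minimal sketch of summarizing RUM samples. It assumes the nearest-rank percentile definition and uses made-up timings; the function name and sample values are illustrative and not taken from Bing's pipeline.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical Above Fold Render timings in milliseconds from real users.
render_ms = [420, 510, 480, 950, 610, 530, 2300, 470, 560, 700]

# Track the whole distribution, with the 90th percentile as the headline number.
summary = {p: percentile(render_ms, p) for p in (50, 75, 90, 95, 99)}
print(summary)
```

In this sample the median looks healthy while the 90th percentile is nearly twice as slow, which is the kind of gap that only shows up when the distribution is tracked rather than an average.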

Performance Tools

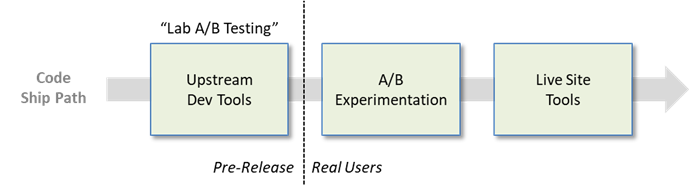

Bing uses performance tools at each stage in the ship pipeline as shown in Figure 3. These tools are designed to be used by any engineer in Bing, not just the performance team engineers.

Figure 3: Performance Tools

Upstream Dev Tools

The Bing performance team operates a system called the Performance Analyzer Service (PAS), which runs in a highly controlled environment of identical machines and is used to measure the performance of front-end code. PAS runs tests against URLs (or scripts) using real browsers in so-called test agents running on the machines. Tests generate the perceived performance metrics discussed earlier, along with a wealth of other useful metrics and diagnostics for performance debugging.

Under the hood, PAS leverages the open-source WebPageTest project, which is an outstanding system for measuring web page performance. PAS uses layered services and a database to implement extensions to WebPageTest.

PAS has built-in support for executing multiple tests together as a group in support of “Lab A/B Testing.” Just as developers test the performance impact of a code change in the real world using A/B experimentation (see next section), they use Lab A/B testing to test the perf impact in a controlled lab environment during the development cycle.
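As a rough illustration of what a grouped lab comparison produces, the sketch below takes repeated runs for a control and a treatment build and reports the percent change in the median of one metric. The data shape, the `lab_ab_delta` name, and the numbers are assumptions for the example; PAS's actual interface is not described here.

```python
from statistics import median


def lab_ab_delta(runs, metric):
    """Compare control and treatment medians for one metric.

    runs is a list of (variant, metrics) pairs, where variant is
    "control" or "treatment" and metrics maps metric names to milliseconds.
    """
    by_variant = {"control": [], "treatment": []}
    for variant, metrics in runs:
        by_variant[variant].append(metrics[metric])
    control = median(by_variant["control"])
    treatment = median(by_variant["treatment"])
    return {
        "control_ms": control,
        "treatment_ms": treatment,
        "delta_pct": (treatment - control) / control * 100,
    }


# Hypothetical interleaved runs; interleaving spreads any drift in the lab
# environment evenly across both variants.
runs = [
    ("control", {"first_render": 300}),
    ("treatment", {"first_render": 330}),
    ("control", {"first_render": 310}),
    ("treatment", {"first_render": 336}),
    ("control", {"first_render": 305}),
    ("treatment", {"first_render": 327}),
]

result = lab_ab_delta(runs, "first_render")
print(result)
```

Using the median rather than the mean keeps a single noisy run from dominating the comparison.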

PAS also has implemented Web Page Replay (WPR), which is a mechanism to eliminate test results variance due to servers, CDN, and networking when testing front-end code. It works by running a pre-test (called the Recording) through a WPR service which is essentially a web proxy that captures and stores all HTTP request/response information. Then, the actual test (called the Playback) is run against the WPR service with all requests served by it using the stored responses from the Recording. PAS is built such that a test agent and its WPR service are collocated, so that all HTTP traffic during a Playback is local to a single host; this further reduces variance.
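The record-then-playback idea can be sketched in a few lines. This is a toy in-memory stand-in, not the WPR service itself: the `ReplayProxy` class, the `fake_origin` function, and the matching of requests by method and URL only are all simplifying assumptions.

```python
class ReplayProxy:
    """Toy record/playback proxy keyed by (method, url)."""

    def __init__(self, fetch_upstream):
        self._fetch_upstream = fetch_upstream  # callable that hits the real origin
        self._recorded = {}
        self.mode = "record"

    def handle(self, method, url):
        key = (method.upper(), url)
        if self.mode == "record":
            # Recording: forward to the origin and store the response.
            response = self._fetch_upstream(method, url)
            self._recorded[key] = response
            return response
        # Playback: serve only stored responses and never touch the network.
        return self._recorded.get(key, (404, b"not recorded"))


upstream_calls = []


def fake_origin(method, url):
    """Hypothetical origin server; counts how often it is actually contacted."""
    upstream_calls.append(url)
    return (200, f"body of {url}".encode())


proxy = ReplayProxy(fake_origin)
recorded = proxy.handle("GET", "https://example.test/search?q=perf")

proxy.mode = "playback"
replayed = proxy.handle("get", "https://example.test/search?q=perf")
missing = proxy.handle("GET", "https://example.test/other")
```

During playback the origin is never contacted, so server, CDN, and network variance drop out of the measurement and only the front-end code under test remains.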

Because of WPR and other tactics to reduce variance such as disabling background services and non-deterministic browser heuristics on the test agents, PAS provides repeatable test results with high sensitivity in detecting the front-end perf impact of a code change. PAS has proven to be very popular with developers in Bing and other divisions at Microsoft.

A/B Experimentation

Bing uses Microsoft’s internal A/B testing platform to evaluate performance in the real world prior to shipping. A/B experimentation is used both for evaluating the benefit of a potential perf optimization and for ensuring that standard feature enhancements don’t regress performance. An A/B experiment’s scorecard includes all relevant performance metrics that enable ship decisions after an experiment has completed (see more in the Policy of No Regressions section).

The Bing performance team operates a pre-experiment gate that acts as a performance sanity check. It is implemented using the PAS system described in the prior section. The gate measures the control and treatments of an experiment to ensure each treatment’s performance is within a margin of expectations. This prevents egregiously performing code from being inflicted on real users during experiments. An additional guard is that after an experiment starts, it can trigger performance alerts if perf has regressed beyond a threshold, and it will automatically be shut down if the regression is severe enough.
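The two guards described above amount to simple decision rules once a regression percentage is available. The sketch below shows one way to express them; the threshold values, function names, and the `Action` enum are invented for illustration and do not reflect Bing's actual settings.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    ALERT = "alert"
    SHUT_DOWN = "shut_down"


def pre_experiment_gate(regression_pct, margin_pct=5.0):
    """Lab check before real users are exposed: pass only if within the margin."""
    return regression_pct <= margin_pct


def live_guard(regression_pct, alert_pct=3.0, shutdown_pct=10.0):
    """Check applied while the experiment runs on real traffic."""
    if regression_pct >= shutdown_pct:
        return Action.SHUT_DOWN
    if regression_pct >= alert_pct:
        return Action.ALERT
    return Action.CONTINUE


# A treatment measured 2% slower in the lab may start; one 40% slower may not.
print(pre_experiment_gate(2.0), pre_experiment_gate(40.0))
# Once live, a 4% regression alerts and a 12% regression stops the experiment.
print(live_guard(4.0), live_guard(12.0))
```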

Live Site Tools

Despite best efforts to prevent performance regressions due to code ships, they sometimes happen. A regression can also occur due to other causes such as a networking infrastructure problem, a capacity issue, or a feature promotion on the site that is outside the control of engineering. To detect and quickly identify the root cause of a regression, Bing uses various tools.

Bing uses typical time-series dashboards and reports to track real world performance over time, including drill-down into different dimensions and percentiles. There are flavors of these dashboards based on pre-cooked daily aggregations (unsampled) as well as NRT (near real time). Additionally, a data store with sampled data is used for deeper debugging across a larger set of dimensions; using sampled data allows for faster queries and visualization than is possible with the full data set.

Because Bing has a vast amount of performance data collected from different sources, it’s a challenge to automatically detect regressions. Unlike availability, which can be measured against a fixed threshold like 99.99%, performance metrics can’t be measured using fixed thresholds because the expected performance varies depending on dimensions such as country, device class, browser, or seasonality in the data (end user performance varies across time-of-day and day-of-week due to a different mix of users on faster enterprise networks versus slower home networks).

To automatically detect and alert on regressions, Bing uses an Anomaly Detection tool. Anomaly detection efficiently checks a vast number of dimension combinations to quickly identify unusual patterns or deviations in metrics, such as spikes or steps in latency or traffic. Anomaly detection allows engineers to identify and isolate issues to specific areas of the system, rather than having to search through a vast amount of data.
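To show why a per-dimension baseline works where a fixed threshold does not, here is a small sketch that compares each dimension combination against its own history for the same hour of the week. It uses a generic robust statistic (the modified z-score based on the median absolute deviation), which is an assumption chosen for the example and not a description of Bing's Anomaly Detection tool; the keys and values are made up.

```python
from statistics import median


def is_anomalous(history, current, threshold=3.5):
    """Flag current if its modified z-score against history exceeds threshold."""
    center = median(history)
    mad = median([abs(x - center) for x in history])
    if mad == 0:
        return current != center
    score = 0.6745 * (current - center) / mad
    return abs(score) > threshold


def find_anomalies(baselines, latest):
    """Check every dimension combination against its own seasonal baseline."""
    return sorted(
        key
        for key, value in latest.items()
        if key in baselines and is_anomalous(baselines[key], value)
    )


# Keys are (country, device class, hour of week); values are P90 latency in ms
# observed in the same hour-of-week slot over previous weeks (hypothetical).
baselines = {
    ("US", "desktop", 34): [800, 820, 790, 810, 805],
    ("IN", "mobile", 34): [2100, 2150, 2080, 2120, 2090],
}
latest = {
    ("US", "desktop", 34): 815,
    ("IN", "mobile", 34): 2900,
}

anomalies = find_anomalies(baselines, latest)
print(anomalies)
```

A single fixed limit of, say, 1000 ms would fire constantly for the slower segment and never for the faster one; comparing each segment with its own history avoids both problems.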

Team & Process

Team and process components are the next part of the model. With a foundation of strong organizational commitment and excellent data and tools, Bing uses a centralized performance team and a couple of key processes to round out its performance mission.

Dedicated Performance Team

Bing has a dedicated performance team whose responsibility is to deliver and maintain the data and tools components described above and to oversee the processes described in the following two sections.

The Bing performance team provides expert guidance, analysis, driving, training, and data and tools that democratize the performance mission, i.e., enable all feature developers to write and ship optimal code. Existing code is sometimes optimized by an engineer from the performance team, but to achieve scale, the performance team frequently identifies opportunities and then partners with other teams, so that many optimizations are implemented by feature developers.

Improvement Workstreams

The Bing performance team drives workstreams whose goal is to improve performance. A backlog is maintained that is populated with opportunities, usually deriving from data analysis of RUM data, lab analysis using the PAS tool, or the emergence of new web technologies (see prior blog for examples of optimizations that have come from these workstreams).

For end user performance, the 90th percentile of worldwide performance is the primary focus, but specialized workstreams are also spun up as needed (e.g., to focus on a particular geography or on higher percentiles). As mentioned above, these workstreams are typically executed in partnership with other teams to scale the optimization efforts.

Given that Bing is a large organization with many teams and experiences, it’s not feasible for the performance team to drive all improvement workstreams. In some cases, they will be driven by feature teams with the performance team in a supporting role.

To add accountability, formal goals are set and tracked on a regular basis to ensure that perf improvement targets are being met. Without accountability, even the best-intentioned process is susceptible to failure.

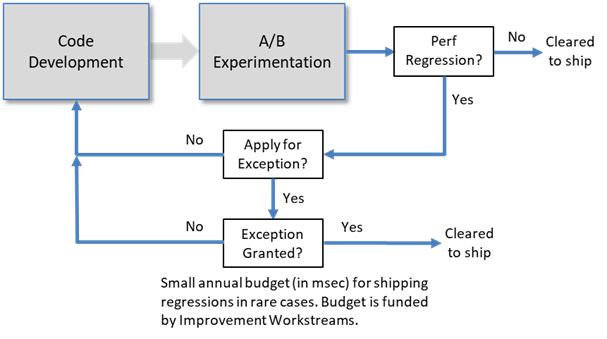

Policy of No Regressions

Enforcing a policy of No Regressions is super important to the performance mission. It’s all too easy to trade off performance against new features, especially if a business metric is shown to improve due to a new feature during A/B experimentation. But this is a losing game, as over time performance will inevitably degrade.

Because of the strong organizational commitment to performance discussed earlier, Bing has been able to implement a process that prevents shipping perf regressions. New features must be at least performance-neutral, and Bing uses a formal sign-off process (tooling + humans) to ensure perf regressions don’t ship. This forces teams to optimize the performance of their features, and in some cases to find other code to optimize in order to stay neutral.

Figure 4: Process for Preventing Performance Regressions

An exception mechanism exists so that in rare cases a perf regression can ship; this requires VP approval and must fit within a small annual budget (in msec) for regressions. To counteract the effect of these regressions, workstreams exist to improve performance as described above, which has the effect of funding the regression budget plus a surplus so that Bing is getting net faster.

Like the perf improvement process, formal goals are set and tracked to ensure accountability to the policy of no regressions.
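The exception mechanism and its budget boil down to simple bookkeeping in milliseconds. The sketch below is one hypothetical way to model it; the class, the budget size, and the individual costs and gains are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RegressionBudget:
    """Tracks approved regressions against an annual budget, in milliseconds."""

    annual_budget_ms: float
    approved_ms: list = field(default_factory=list)
    improvements_ms: list = field(default_factory=list)

    def remaining_ms(self):
        return self.annual_budget_ms - sum(self.approved_ms)

    def approve_exception(self, cost_ms, vp_approved):
        """An exception ships only with VP approval and room left in the budget."""
        if not vp_approved or cost_ms > self.remaining_ms():
            return False
        self.approved_ms.append(cost_ms)
        return True

    def record_improvement(self, gain_ms):
        self.improvements_ms.append(gain_ms)

    def net_change_ms(self):
        """Negative means the site got faster overall."""
        return sum(self.approved_ms) - sum(self.improvements_ms)


budget = RegressionBudget(annual_budget_ms=20)
first = budget.approve_exception(8, vp_approved=True)      # fits in the budget
second = budget.approve_exception(15, vp_approved=True)    # exceeds what remains
third = budget.approve_exception(5, vp_approved=False)     # no VP approval

budget.record_improvement(30)  # gains from improvement workstreams
print(budget.remaining_ms(), budget.net_change_ms())
```

As long as the improvement workstreams deliver more than the approved regressions cost, the net change stays negative and the site keeps getting faster.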

Continuity Over Time

Finally, continuity over time is essential. In other words, as an organization morphs due to turnover or new people in leadership positions, it’s important to keep the model’s execution intact.

Fortunately, this hasn’t been a problem at Bing, and we’ve been able to improve the model’s execution over time. But we’ve seen cases in other divisions where a large reorganization led to a dramatic loss of institutional knowledge and a significant setback in the performance mission. The best way to overcome such situations is to ensure knowledge transfer to the new people or, even better, to have the model institutionalized company-wide, or at least at a higher level in the organization.

- Paul Roy, Jason Yu, and the Bing Performance Team