This blog post introduces recent updates to Bing that make use of the Microsoft Turing Natural Language Generation (T-NLG) model and expand the use of the Microsoft Turing Natural Language Representation (T-NLR) model in production. If you are interested in trying out the Turing NLR model for your own business scenarios, you can sign up for our preview.

Bringing Natural Language Generation into Bing

In May, we outlined the use of AI models fine-tuned from the Turing Natural Language Representation model in different parts of Bing’s question answering and ranking systems. Over the last year, there has been very exciting progress in Natural Language Generation, including the training of our own T-NLG model and the introduction of OpenAI’s GPT-3, which was trained on Microsoft Azure. Today we are excited to share how these advancements are enabling us to build better search experiences.

Improving Autosuggest using Turing-NLG Next Phrase Prediction

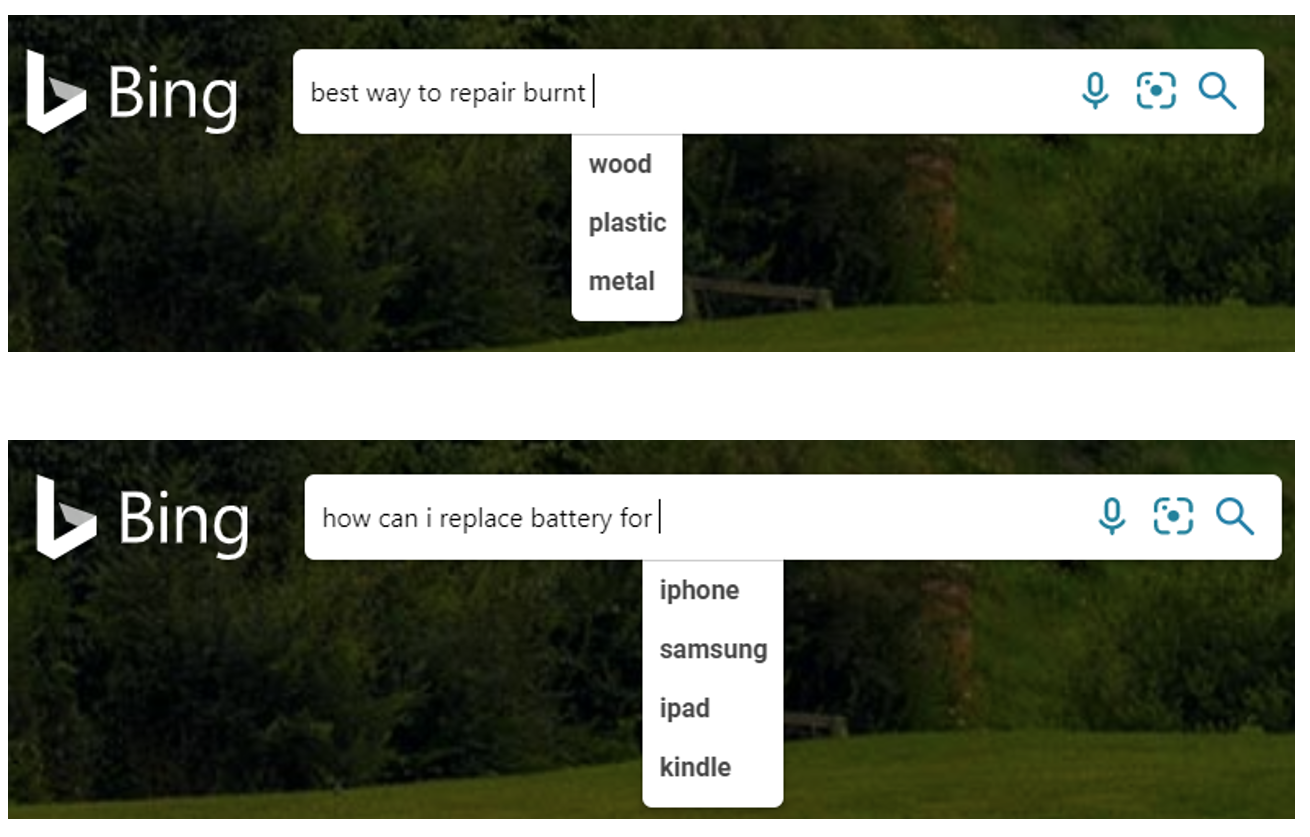

Autosuggest (AS) improves the search experience by suggesting the most relevant completed queries that match the partial query entered by the user. The key objective of AS is to save users time and keystrokes by offering better and faster query completion. The most frequent search prefixes are usually handled by suggestions that leverage previous queries issued by Bing users. However, as the user types more specific search terms (longer queries), the prefix becomes less likely to match any previously issued query, so the ability to generate suggestions on the fly becomes essential to overcome the limitations of approaches based on previous queries.
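To make this division of labor concrete, here is a minimal sketch, not Bing’s implementation: a hypothetical `suggest` function that ranks previously issued queries by frequency and falls back to a generator only when history cannot fill all `k` slots. The in-memory `Counter` log and the `generate` callable (standing in for a generative model) are assumptions for illustration.

```python
from collections import Counter
from typing import Callable, List


def suggest(prefix: str,
            query_log: Counter,
            generate: Callable[[str], List[str]],
            k: int = 3) -> List[str]:
    """Return up to k completions: past queries first, generated ones to fill gaps."""
    # History-based suggestions: previously issued queries that start with
    # the prefix, most frequently issued first (ties broken alphabetically).
    history = sorted(
        (q for q in query_log if q.startswith(prefix)),
        key=lambda q: (-query_log[q], q),
    )[:k]
    if len(history) == k:
        return history
    # Long, specific prefixes rarely match past queries, so fill the
    # remaining slots with generated completions (skipping duplicates).
    generated = [s for s in generate(prefix) if s not in history]
    return history + generated[: k - len(history)]


log = Counter({"weather today": 120, "weather tomorrow": 80, "weather radar": 30})
# A short prefix is fully served from history.
print(suggest("weather", log, lambda p: []))
# → ['weather today', 'weather tomorrow', 'weather radar']
# A long prefix with no history relies entirely on the (stub) generator.
print(suggest("how to fix a leaking kitchen", log, lambda p: [p + " faucet"]))
# → ['how to fix a leaking kitchen faucet']
```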

Next Phrase Prediction is an AS feature that provides full-phrase suggestions in real time for long queries. Before Next Phrase Prediction was introduced, query suggestions for longer queries were limited to completing the word the user was currently typing. With Next Phrase Prediction, full-phrase suggestions can be presented. Furthermore, because these suggestions are generated in real time, they are not limited to previously seen data or to the current word being typed. As a result, the coverage of AS completions increases considerably, significantly improving the overall user experience. Generative models are particularly challenging to serve at inference time, both because of the size of the models and because of the number of inferences required per query: ideally, the model runs once per keystroke as the user types. We have introduced several innovations in model compression, state caching, and hardware acceleration to delight our users cost-efficiently.
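The post does not detail how Bing’s state caching works. As one illustration of the general idea, the sketch below caches a model’s incremental state for every prefix it has already processed, so each new keystroke costs one model step instead of a full pass over the query. The character-level `toy_step` function and the `PrefixStateCache` class are hypothetical; a Transformer-based generator would typically cache attention key/value tensors rather than a small tuple.

```python
from typing import Callable, Dict, Tuple

State = Tuple[int, ...]


class PrefixStateCache:
    """Caches per-prefix model state so each keystroke only processes new input."""

    def __init__(self, step: Callable[[State, str], State], initial: State):
        self.step = step                      # one (expensive) model step
        self.cache: Dict[str, State] = {"": initial}
        self.steps_run = 0                    # model steps actually executed

    def state_for(self, prefix: str) -> State:
        # Find the longest prefix of the input whose state is already cached;
        # the empty string is always cached, so this loop terminates.
        cut = len(prefix)
        while prefix[:cut] not in self.cache:
            cut -= 1
        state = self.cache[prefix[:cut]]
        # Run the model only over the characters typed since then, caching
        # each intermediate state for later keystrokes (or backspaces).
        for i in range(cut, len(prefix)):
            state = self.step(state, prefix[i])
            self.steps_run += 1
            self.cache[prefix[: i + 1]] = state
        return state


def toy_step(state: State, ch: str) -> State:
    """Stand-in for a model step: a simple rolling hash of the characters seen."""
    return ((state[0] * 31 + ord(ch)) % 1_000_003,)


cache = PrefixStateCache(toy_step, (0,))
query = "how to"
for n in range(1, len(query) + 1):      # simulate one call per keystroke
    cache.state_for(query[:n])
print(cache.steps_run)  # → 6 (re-encoding every prefix from scratch would take 21)
```

This cache grows without bound; a production system would need an eviction policy, for example keeping only the prefixes from the current typing session.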

Here are some examples of queries that now receive suggestions where previously they received none:

Better SERP exploration via Generative Questions in People Also Ask

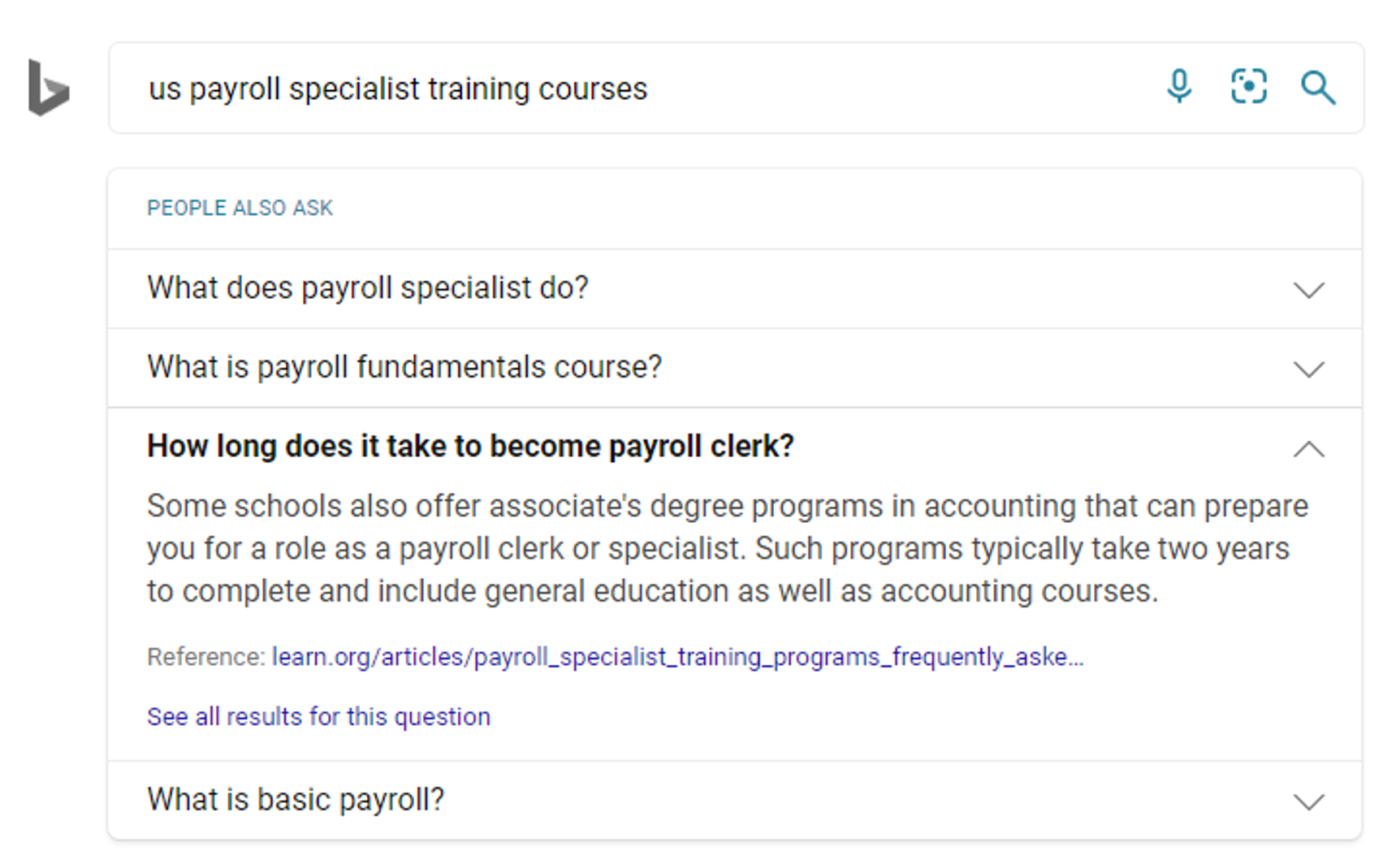

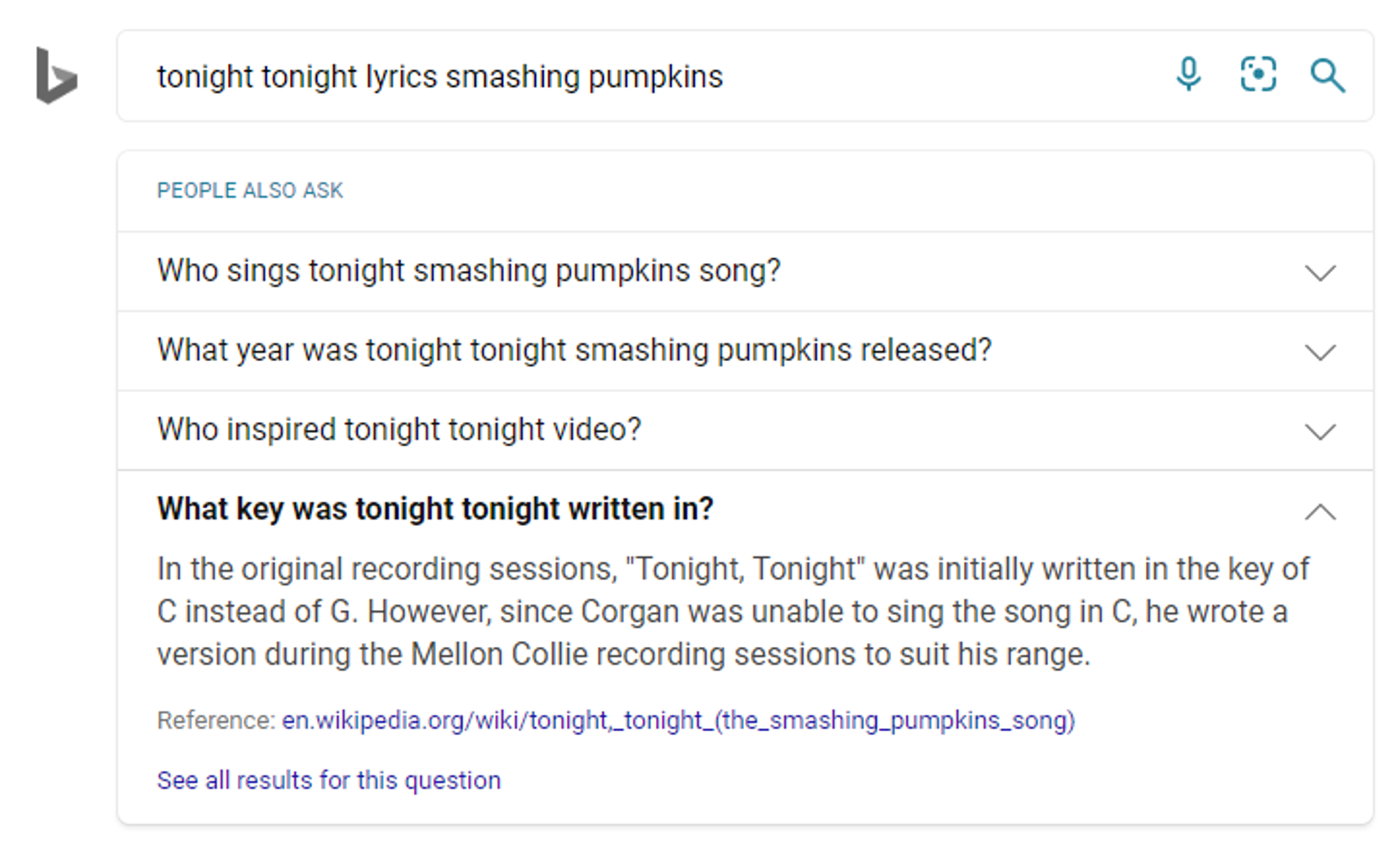

People Also Ask (PAA) is an extremely useful feature that allows users to expand the scope of their search by exploring answers to questions related to their original query. This saves users the time and effort of reformulating their questions to better fit their intent, while also enabling the exploration of adjacent intents. Since Bing has answers to a lot of user questions, it is natural and easy to find existing questions that are similar to the one the user originally typed.

However, users sometimes ask questions for which no similar questions have previously been asked and answered. In such cases, we need the ability to generate useful question-answer pairs from scratch in order to populate the PAA block.

We run a high-quality generative model over billions of documents to generate question-answer pairs from the content of those documents. Later, when the same documents appear on the Search Engine Result Page (SERP), we use the previously generated question-answer pairs to help populate the PAA block, in addition to existing similar questions that have previously been asked. This feature lets users explore the entire SERP by asking more questions rather than simply browsing documents.
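The offline/online split described above can be sketched as follows. This is a minimal illustration under assumptions, not Bing’s pipeline: `build_qa_index`, `populate_paa`, and `toy_generate_qa` (a pattern match standing in for the generative model) are hypothetical, and listing previously asked questions before generated ones is an arbitrary choice for the example.

```python
from typing import Callable, Dict, List, Tuple

QAPair = Tuple[str, str]  # (question, answer)


def build_qa_index(docs: Dict[str, str],
                   generate_qa: Callable[[str], List[QAPair]]) -> Dict[str, List[QAPair]]:
    """Offline: run the question generator once per document, keyed by document ID."""
    return {doc_id: generate_qa(text) for doc_id, text in docs.items()}


def populate_paa(serp_doc_ids: List[str],
                 existing: List[QAPair],
                 qa_index: Dict[str, List[QAPair]],
                 limit: int = 4) -> List[QAPair]:
    """Online: combine previously asked questions with pairs generated for SERP documents."""
    candidates = list(existing)
    for doc_id in serp_doc_ids:
        candidates.extend(qa_index.get(doc_id, []))
    block: List[QAPair] = []
    seen = set()
    for question, answer in candidates:
        key = question.lower()  # drop case-insensitive duplicate questions
        if key in seen:
            continue
        seen.add(key)
        block.append((question, answer))
        if len(block) == limit:
            break
    return block


def toy_generate_qa(text: str) -> List[QAPair]:
    """Stand-in generator: turns 'X is Y' sentences into ('What is x?', 'Y')."""
    subject, _, rest = text.partition(" is ")
    return [(f"What is {subject.lower()}?", rest)] if rest else []


index = build_qa_index(
    {"d1": "Ransomware is malware that encrypts files and demands payment."},
    toy_generate_qa,
)
existing = [("How do I remove ransomware?", "(existing answer)")]
for q, a in populate_paa(["d1"], existing, index):
    print(q, "->", a)
```

The expensive generation runs once per document ahead of time, so serving a SERP only costs a lookup by document ID.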

Here are a few examples of how this shows up on Bing (questions in green boxes are powered by the question generation model):

Improving search quality globally with Natural Language Representation

Bing is available in over 100 languages and 200 regions. Although much of the latest AI research has focused on English, we believe it is incredibly important to build AI technology that is inclusive of everyone. That is why we released our XGLUE dataset, which covers 19 languages across real production tasks, so that the research community could participate in our journey. We also shared our latest cross-lingual innovation, InfoXLM, which is incorporated into the Turing Universal Language Representation (T-ULR) model. We’re excited to share how building on top of this technology has improved the search experience for all users, in any language and any region of the world.

Expanding our intelligent answers to 100 languages and 200+ regions

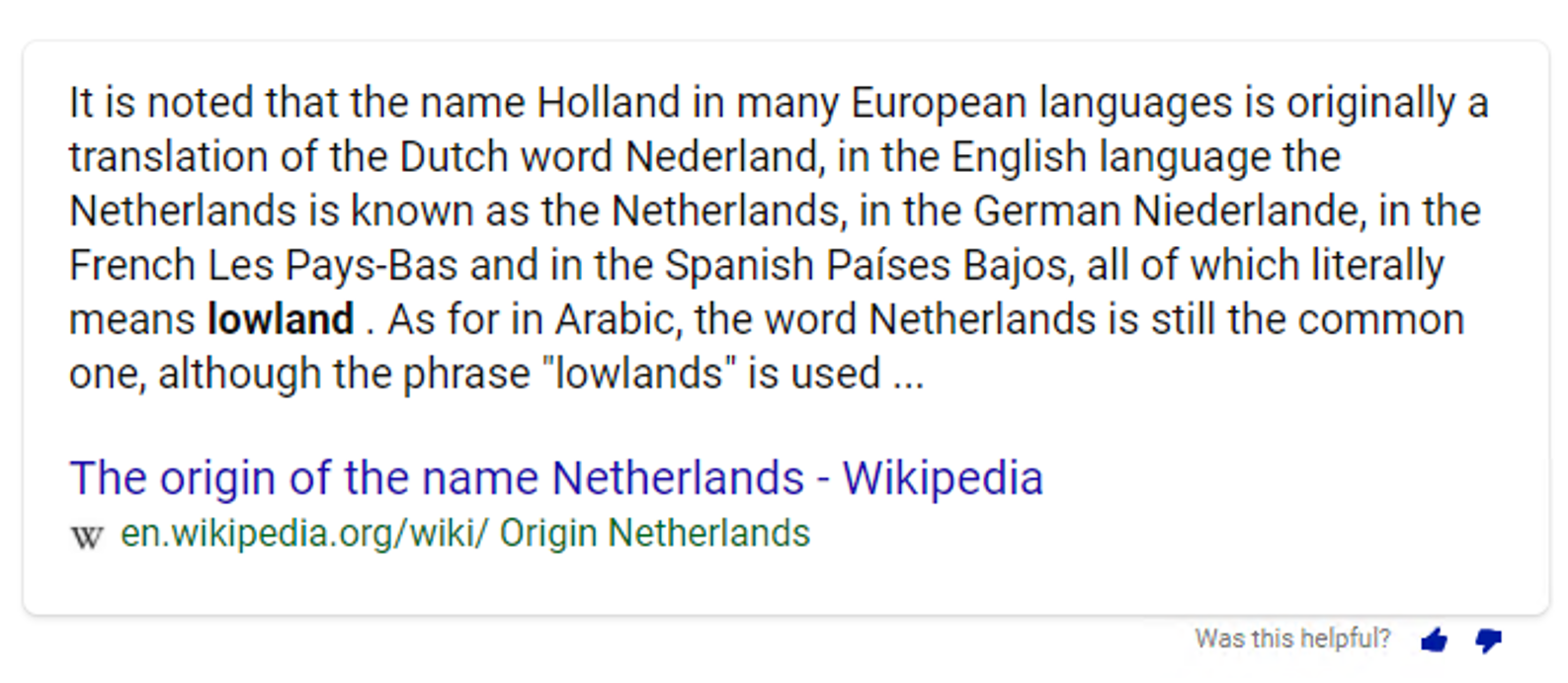

In our May blog post, we talked about how we took a zero-shot approach with our T-ULR (Turing Universal Language Representation) model to expand intelligent answers beyond English-speaking markets. We initially shipped those answers to 13 markets using a single model without any training data in those markets.

We are very happy to announce that we have since expanded our intelligent answers to over 100 languages and 200 regions. Here are a few translated examples of non-English queries that generate answers from a web search result, using the same model that does so in English markets.

Query: {meaning of the name Holland}

Original:

Translated:

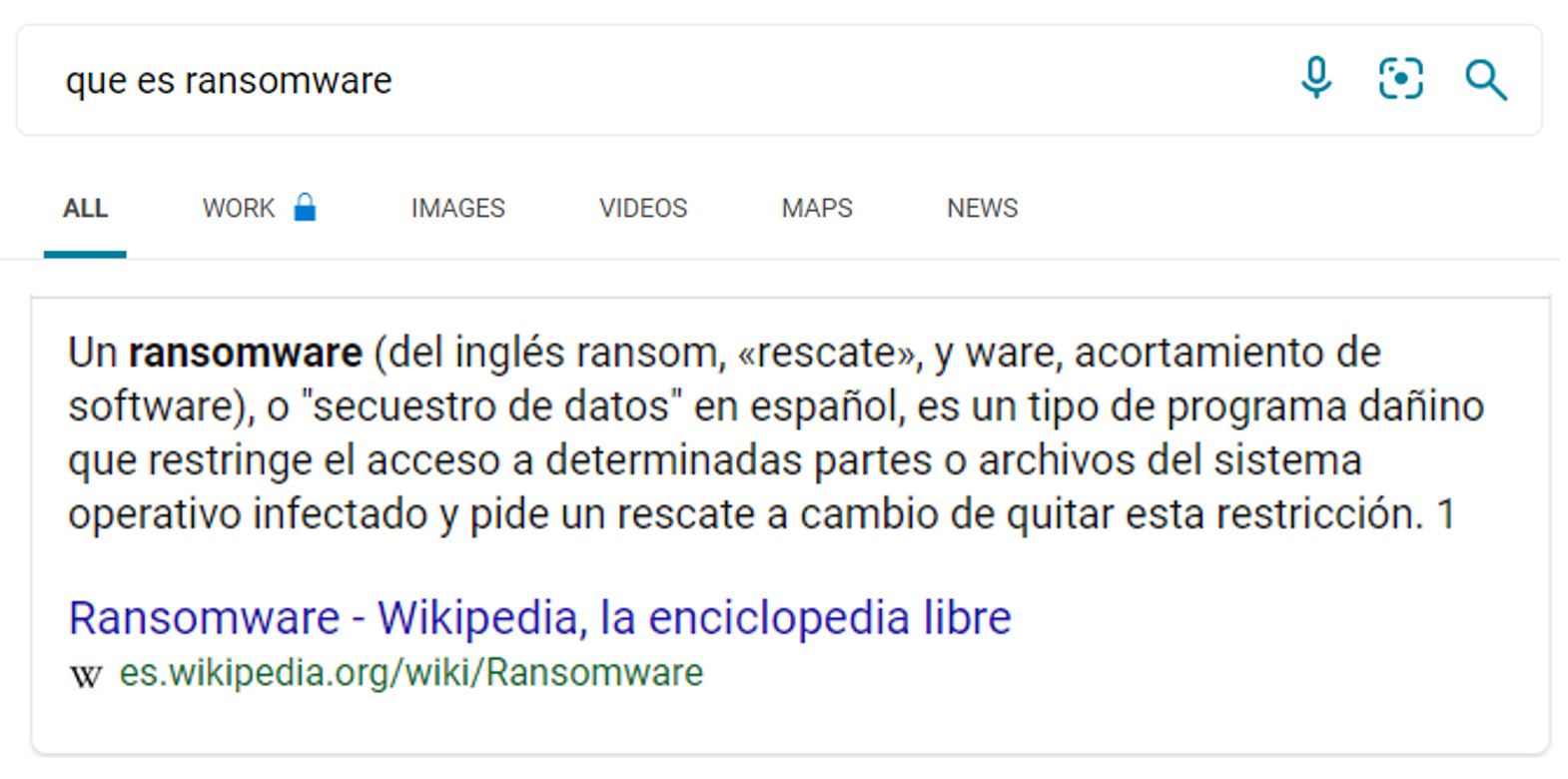

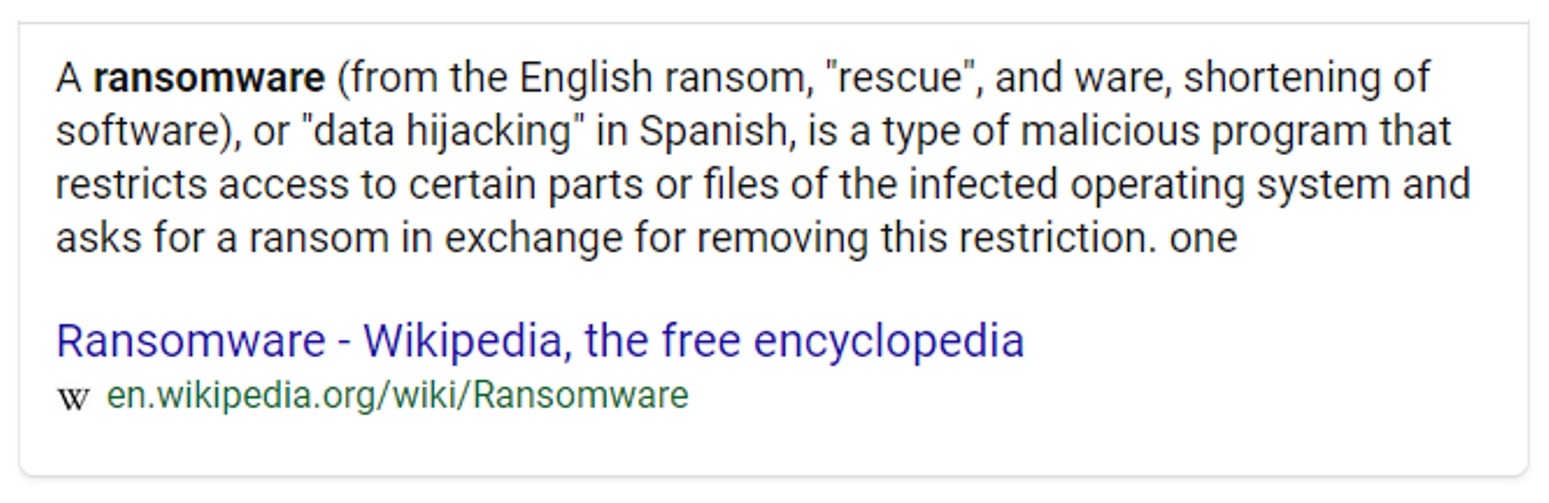

Query: {What is ransomware}

Original:

Translated:

Universal Semantic Highlighting in Captions

We are able to reuse the same models, pre-trained on 100+ languages and fine-tuned in a zero-shot fashion, to improve captions in all markets. These powerful models are enabling semantic highlighting, a new feature that expands highlighting in captions beyond simple keyword matching, helping users find information faster, without having to read through the entire snippet to look for an answer.

Previously, caption highlighting depended heavily on matching the keywords present in the search query. However, keyword highlighting is of limited help when the query is a question; in that case, it is much better to highlight the actual answer.

Highlighting the answer in a caption is similar to the task in Stanford’s machine reading comprehension benchmark (SQuAD), on which Microsoft was the first to reach human parity. With Universal Semantic Highlighting, we can identify and highlight answers within captions, not just in English but in all languages.
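To illustrate the difference from keyword highlighting, the sketch below contrasts the two approaches on an invented caption for the {Germany population} query. It is a minimal sketch under assumptions: the answer span is hard-coded where a reading-comprehension model would predict it, and `<b>` tags stand in for however the SERP actually renders highlights.

```python
import re
from typing import Tuple


def keyword_highlight(caption: str, query: str) -> str:
    """Baseline: bold every caption word that also appears in the query."""
    terms = {t.lower() for t in re.findall(r"\w+", query)}
    return re.sub(
        r"\w+",
        lambda m: f"<b>{m.group(0)}</b>" if m.group(0).lower() in terms else m.group(0),
        caption,
    )


def answer_highlight(caption: str, span: Tuple[int, int]) -> str:
    """Semantic variant: bold the [start, end) character span given as the answer."""
    start, end = span
    return caption[:start] + "<b>" + caption[start:end] + "</b>" + caption[end:]


caption = "In 2019 the population of Germany was 81,453,631."
print(keyword_highlight(caption, "Germany population"))
# → In 2019 the <b>population</b> of <b>Germany</b> was 81,453,631.

# Hypothetical model output: character offsets of the answer in the caption.
start = caption.index("81,453,631")
print(answer_highlight(caption, (start, start + len("81,453,631"))))
# → In 2019 the population of Germany was <b>81,453,631</b>.
```

Because the model returns character offsets rather than matched words, the same highlighting step works unchanged for any language.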

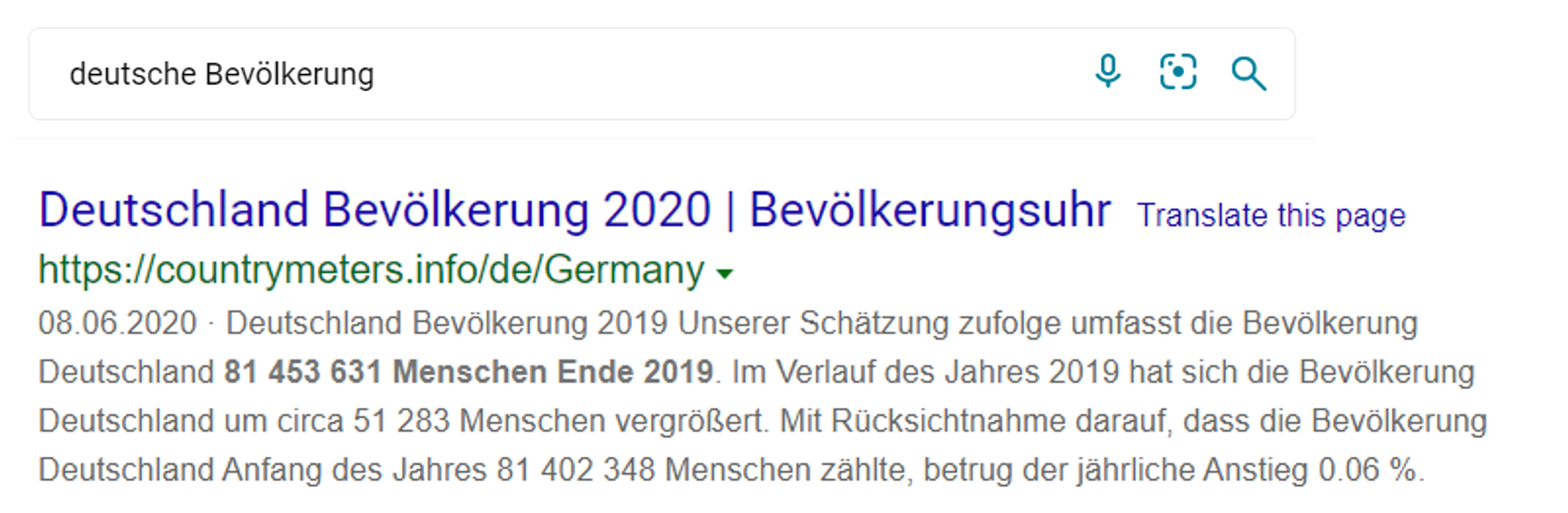

Below are a couple of examples that show how it works. The first query is {Germany population}. As you can see, instead of highlighting the words “Germany” and “population”, we highlight the actual figure, 81,453,631, as the population of Germany in 2019.

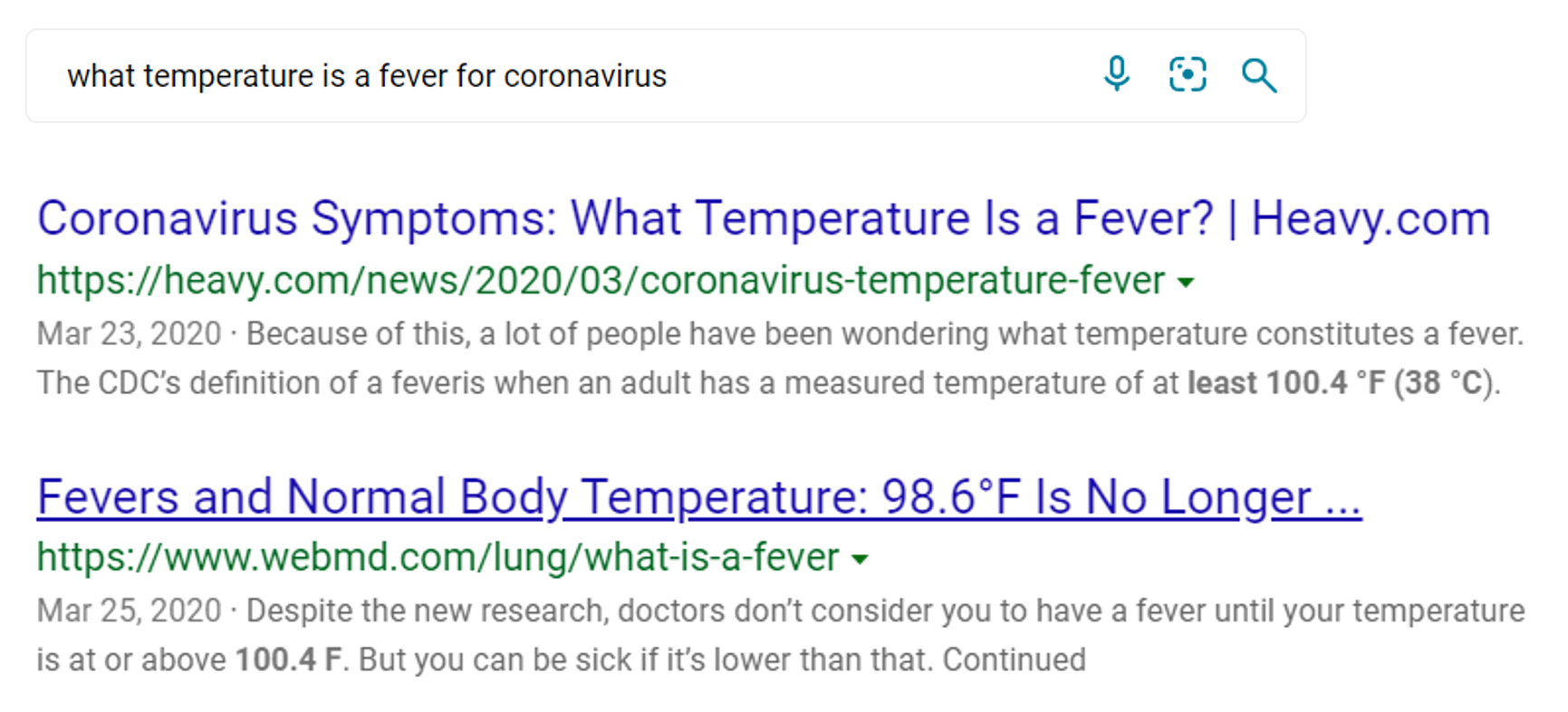

Here is another example, for the query {what temperature is a fever for coronavirus}. Across multiple documents, the captions clearly highlight the actual answer, 100.4 °F, as the definition of a fever.

Conclusion

Advancements in NLP continue to arrive at a rapid pace, and Microsoft Turing models, for both language representation and generation, are at the cutting edge, bringing the very best of deep learning and Microsoft’s innovation into Microsoft’s product family. But nothing makes our entire team prouder than bringing this innovation to Bing users worldwide, helping them find the trustworthy answers they need, whatever language they speak and wherever they are on the planet.

We would love to hear your feedback or suggestions! You can submit them using the Feedback button in the bottom right corner of the search results page, or directly to our new Twitter handle @MSBing_Dev.