GIFs have grown in popularity, in large part because they give users a unique way to express their emotions. That said, finding the perfect GIF can be challenging. You could be looking for a particular expression from a favorite celebrity, an iconic moment from a movie or TV show, or just a way to say ‘good morning’ that’s not mundane. To provide the best GIF search experience, Bing employs techniques such as sentiment analysis, OCR, and even pose modeling of the subjects appearing in GIFs to reflect subtle variations in the intent of your queries. Read on to find out how we made it work, and experience it yourself on Bing’s Image search by typing a query like angry panda. Here are some of the techniques we’ve used to make our GIF search more intelligent.

Vector Search and Word Embeddings for Images to Improve Recall

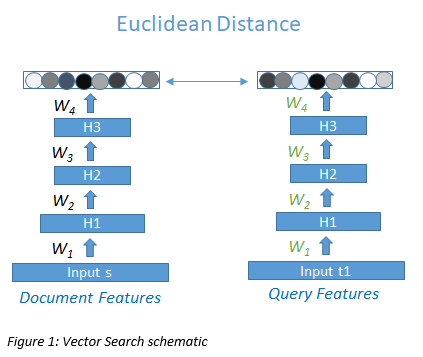

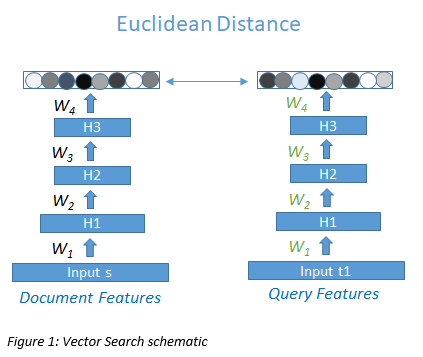

As we’ve previously done with Image Ranking and Vector Based Search, we’ve gone beyond simple keyword matching techniques and captured underlying semantic relationships. Using text and image embedding vectors, we first mapped the queries and images into a high-dimensional vector space and then used similarity in that space to improve recall. Put simply, vector-based search teaches the search engine that words like “amazing”, “great”, “awesome”, and “fantastic” are semantically related. This allows us to retrieve documents not just by exact match, but also by match with semantically related terms.
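To make the idea concrete, here is a minimal sketch of similarity search over embeddings. It is not Bing's implementation: the three-dimensional vectors in `EMBEDDINGS` are invented for illustration (real embeddings are learned and have far more dimensions), and `cosine_similarity` and `nearest_terms` are hypothetical helper names.

```python
import math

# Toy embeddings, invented for illustration only.
EMBEDDINGS = {
    "amazing":   [0.90, 0.80, 0.10],
    "great":     [0.85, 0.75, 0.15],
    "awesome":   [0.92, 0.78, 0.05],
    "fantastic": [0.88, 0.82, 0.12],
    "sad":       [-0.70, 0.10, 0.90],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_terms(query, k=3):
    """Return the k terms whose embeddings are most similar to the query's."""
    q = EMBEDDINGS[query]
    scored = [
        (cosine_similarity(q, vec), term)
        for term, vec in EMBEDDINGS.items()
        if term != query
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [term for _, term in scored[:k]]
```

With these toy vectors, `nearest_terms("amazing")` returns the three related words and leaves out “sad”, which is the behavior that lets a search for one term recall GIFs tagged with its neighbors.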

GIF Summarization and OCR algorithms for improving precision

One reason GIF search is more complicated than image search is that a GIF is composed of many images (frames), so you need to check multiple frames for relevance, not just the first. For instance, a search for a cat GIF, a celebrity GIF, or a TV show or cartoon character GIF needs to ensure that the subject occurs in multiple frames of the GIF and not just the first. Moreover, many users include phrases like “hello”, “good morning”, or “Monday mornings” in their queries, and we need to ensure that these textual messages also appear in the GIF. That is where our Optical Character Recognition (OCR) system comes into play. We use a deep-neural-network-based OCR system, and we’ve added synthetic training data to better adapt it to the GIF scenario.
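One simple way to picture the multi-frame check is as a coverage ratio. The sketch below assumes some upstream recognizer has already produced a set of labels per frame; the function names and the `min_coverage` threshold are hypothetical, not values from our system.

```python
def subject_coverage(frame_labels, subject):
    """Fraction of frames in which the subject was detected.

    frame_labels: list of sets, one set of detected labels per frame
    (assumed to come from a separate recognition step).
    """
    if not frame_labels:
        return 0.0
    hits = sum(1 for labels in frame_labels if subject in labels)
    return hits / len(frame_labels)

def is_relevant(frame_labels, subject, min_coverage=0.5):
    """Treat a GIF as relevant only if the subject appears in enough frames."""
    return subject_coverage(frame_labels, subject) >= min_coverage
```

A GIF whose first frame shows a cat but whose remaining frames do not would score low here, which is exactly the case that checking only the first frame would get wrong.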

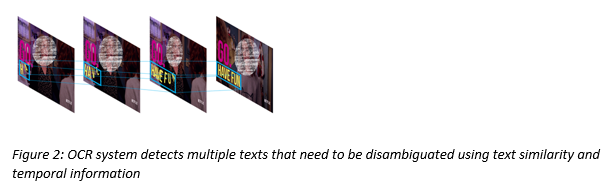

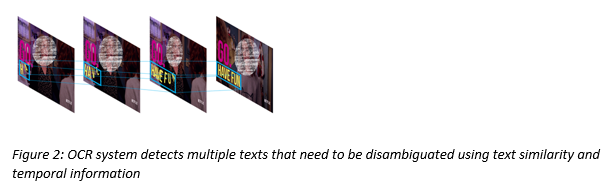

The multi-frame nature of a GIF introduces additional complexities for OCR as well. For example, an OCR system would look at the images below, and detect four different pieces of text – “HA”, “HAVE”, “HAVE FU” and “HAVE FUN”. In fact there’s just one piece of text – “HAVE FUN”. We use text similarity combined with spatial and temporal information to disambiguate such cases.
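The sketch below shows one way such disambiguation could work. It is an assumption-laden illustration rather than our production logic: the `Detection` type, the prefix-or-ratio text test, the box containment measure, and all thresholds are hypothetical choices.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher

@dataclass
class Detection:
    frame: int   # frame index within the GIF
    text: str    # text recognized by OCR in that frame
    box: tuple   # (left, top, right, bottom) in pixels

def text_similar(a, b, threshold=0.8):
    """True if one string is a prefix of the other or they are near-identical."""
    a, b = a.strip().upper(), b.strip().upper()
    shorter, longer = sorted((a, b), key=len)
    if not shorter:
        return False
    return longer.startswith(shorter) or SequenceMatcher(None, a, b).ratio() >= threshold

def box_containment(a, b):
    """Intersection area divided by the area of the smaller box."""
    width = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    height = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    smaller = min((a[2] - a[0]) * (a[3] - a[1]), (b[2] - b[0]) * (b[3] - b[1]))
    return (width * height) / smaller if smaller > 0 else 0.0

def merge_detections(detections, max_frame_gap=2, min_containment=0.5):
    """Group detections that are close in time, space, and text; keep the longest text."""
    tracks = []  # each track is a list of detections judged to be the same text
    for det in sorted(detections, key=lambda d: d.frame):
        for track in tracks:
            last = track[-1]
            if (det.frame - last.frame <= max_frame_gap
                    and box_containment(det.box, last.box) >= min_containment
                    and text_similar(det.text, last.text)):
                track.append(det)
                break
        else:
            tracks.append([det])  # no matching track, so start a new one
    return [max(track, key=lambda d: len(d.text)).text for track in tracks]
```

Fed the four partial detections from the example, each in roughly the same place on consecutive frames, `merge_detections` collapses them into the single string “HAVE FUN”, while text elsewhere in the frame stays separate.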

Sentiment Analysis using text – to improve results quality

A common scenario for GIF search is emotion queries, where users are searching for GIFs that match a certain emotion (searches like “happy”, “sad”, “awesome”, “great”, “angry”, or “frustrated”). Here, we analyze the sentiment or emotion of the GIF query and try to provide GIF results with a matching sentiment. Query sentiment analysis is complicated because queries usually contain just two or three terms, and those terms don’t always reflect emotions. To understand query sentiment, we’ve analyzed public community websites and learned billions of relationships between text and emojis.

To understand the sentiment of GIF documents, we analyze the text that surrounds them on web pages. With a sentiment for both the query and the documents, we can match the sentiment of the user’s query to the results they see. For instance, if a user issues the query “good job”, and we’ve already detected text like “Good job 😊 😊 ” on chat sites, we would infer that “Good job” is a query with positive sentiment and choose GIF documents with positive sentiment.
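A toy version of this matching might look like the following. Everything here is an assumption made for illustration: the small emoji sets, the simple majority vote, and the `sentiment` field on each GIF stand in for models learned from far more data.

```python
from collections import Counter, defaultdict

# Tiny hand-picked emoji sets, for illustration only.
POSITIVE_EMOJIS = {"😊", "😀", "👍"}
NEGATIVE_EMOJIS = {"😢", "😠", "👎"}

def learn_phrase_sentiment(messages):
    """Count positive and negative emojis seen alongside each phrase.

    messages: iterable of (phrase, list_of_emojis) pairs.
    """
    counts = defaultdict(Counter)
    for phrase, emojis in messages:
        key = phrase.lower()
        for emoji in emojis:
            if emoji in POSITIVE_EMOJIS:
                counts[key]["positive"] += 1
            elif emoji in NEGATIVE_EMOJIS:
                counts[key]["negative"] += 1
    return counts

def query_sentiment(query, counts):
    """Majority vote over the emoji counts recorded for the query phrase."""
    seen = counts.get(query.lower())
    if not seen:
        return "unknown"
    positive, negative = seen["positive"], seen["negative"]
    if positive == negative:
        return "neutral"
    return "positive" if positive > negative else "negative"

def filter_by_sentiment(query, gifs, counts):
    """Keep GIFs whose (precomputed) sentiment matches the query's sentiment."""
    target = query_sentiment(query, counts)
    if target in ("unknown", "neutral"):
        return list(gifs)  # no clear signal, so do not filter
    return [gif for gif in gifs if gif["sentiment"] == target]
```

Given the message “Good job 😊 😊”, the query “good job” resolves to positive, and only GIFs labeled positive survive the filter.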

Expressiveness, Pose and Awesomeness using CNNs

Celebrities are a major area of GIF searches. Given that users rely on GIFs to express their emotions, it is a basic requirement that the top-ranked celebrity GIFs convey strong emotions. Selecting GIFs that contain the right celebrity is easy, but identifying GIFs that contain strong emotions or messages is hard. We do this by using deep learning models to analyze poses, actions, and expressiveness.

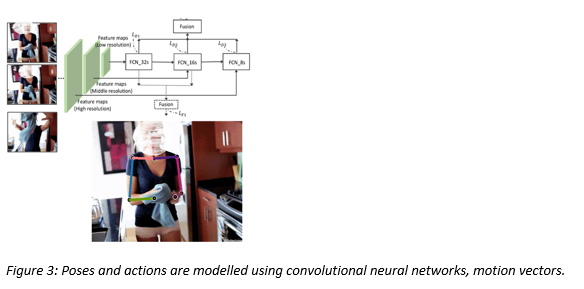

Poses and actions

Poses can be modeled using the positions of skeleton points, such as the head, shoulders, and hands. Actions can be modeled using the motion of these points across frames. To extract features that describe human poses and actions, we estimate the skeleton point positions in each frame and then estimate their motion across adjacent frames. A fully convolutional network estimates each skeleton point of the upper body, and the motion vectors of these skeleton points are extracted to capture the motion information. A final model deduces the ‘awesomeness’ by examining the poses and the actions in the GIF.
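As a rough illustration of the motion step only, the sketch below computes per-joint displacement between adjacent frames. It assumes the skeleton points have already been estimated by a network; the dictionary layout and the `mean_motion_magnitude` summary are hypothetical, and the latter is just one possible input feature, not the final ‘awesomeness’ model.

```python
import math

def motion_vectors(frames):
    """Displacement of each skeleton point between adjacent frames.

    frames: list of dicts mapping a joint name to its (x, y) position,
    assumed to come from a separate pose-estimation step.
    """
    vectors = []
    for previous, current in zip(frames, frames[1:]):
        step = {}
        for joint, (x1, y1) in current.items():
            if joint in previous:  # skip joints missing in the earlier frame
                x0, y0 = previous[joint]
                step[joint] = (x1 - x0, y1 - y0)
        vectors.append(step)
    return vectors

def mean_motion_magnitude(vectors):
    """Average length of all motion vectors; a crude measure of how much moves."""
    magnitudes = [math.hypot(dx, dy) for step in vectors for dx, dy in step.values()]
    return sum(magnitudes) / len(magnitudes) if magnitudes else 0.0
```

For a two-frame GIF where the head stays put and a hand moves by (3, 4) pixels, the hand's motion vector has length 5 and the mean magnitude is 2.5.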

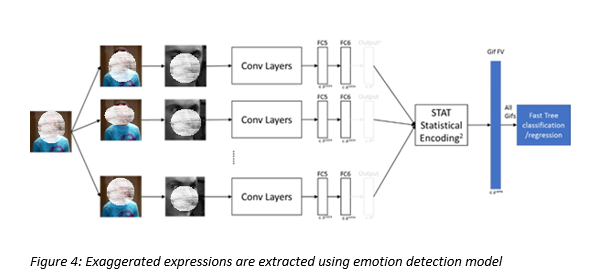

Exaggerated expressions

Here we analyze the facial expressions of the subject. We extract the expressions from multiple frames of the GIF and compute a score that indicates how exaggerated they are, so that our GIF search can favor results with more exaggerated facial expressions.
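The exact scoring is beyond the scope of this post, but a simple stand-in conveys the idea. The sketch assumes each frame already has an expression intensity between 0 and 1 from some upstream model, and it averages the strongest few frames; both the input and the top-k averaging are assumptions made for illustration.

```python
def exaggeration_score(frame_intensities, top_k=3):
    """Average of the top_k strongest per-frame expression intensities.

    frame_intensities: list of floats in [0, 1], one per analyzed frame
    (hypothetical output of a facial-expression model).
    """
    if not frame_intensities:
        return 0.0
    strongest = sorted(frame_intensities, reverse=True)[:top_k]
    return sum(strongest) / len(strongest)

def rank_by_exaggeration(gifs):
    """Order GIF ids from most to least exaggerated.

    gifs: dict mapping a GIF id to its list of frame intensities.
    """
    return sorted(gifs, key=lambda gif_id: exaggeration_score(gifs[gif_id]), reverse=True)
```

Averaging only the strongest frames means a GIF with one brief but dramatic expression is not penalized for its calmer frames.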

By pairing deep convolutional neural network models for expressiveness, poses, actions, and exaggeration with our large celebrity database, we can return awesome results for celebrity searches.

Image graph and other techniques

In addition to helping us understand semantic relationships, Image Graph also improves ranking quality. Image Graph is made up of several clusters of similar images (in this case, GIFs) and holds historical data, such as clickthrough rate, for those images. In the graph, images within the same cluster are visually similar (the distance between images denotes similarity), and the distance between clusters denotes the visual similarity of the main images within them. Now, if we know that an image in cluster D was extremely popular, we can propagate that clickthrough rate data to all other GIFs in cluster D. This greatly improves ranking quality. We can also improve the diversity of the recommended GIFs using this technique.
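The propagation step can be sketched in a few lines. This is a simplified assumption of how it might work: clusters are given as plain lists of GIF ids, and a GIF with no history of its own inherits the average observed clickthrough rate of its cluster. The function name and the averaging rule are hypothetical.

```python
def propagate_ctr(clusters, observed_ctr, default=0.0):
    """Estimate a clickthrough rate for every GIF using its cluster's history.

    clusters: dict mapping a cluster id to a list of GIF ids.
    observed_ctr: dict mapping a GIF id to its measured clickthrough rate.
    """
    estimated = {}
    for members in clusters.values():
        known = [observed_ctr[gif] for gif in members if gif in observed_ctr]
        cluster_ctr = sum(known) / len(known) if known else default
        for gif in members:
            # Keep a GIF's own measurement; otherwise fall back to the cluster average.
            estimated[gif] = observed_ctr.get(gif, cluster_ctr)
    return estimated
```

If one GIF in cluster D has a measured clickthrough rate of 0.3, its visually similar neighbors in D, which have no data of their own, are also estimated at 0.3.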

Finally, we also consider source authority, virality, and popularity when deciding which GIFs to show on top. We also run detrimental content classifiers, one based on images and another based on text, to remove offensive content so that all our top results are clean.

There you have it – did you really imagine that so many machine learning techniques are required to make GIF ranking work? Altogether, these components bring intelligence to Bing’s GIF search experience, making it easier for users to find what they’re looking for. Give it a try on Bing image search.