In recent years, much ground-breaking work in image and video retrieval, auto-captioning, and visual question answering has ensued because of the development of transformer-based techniques for computer vision and natural language processing. However, applying similar techniques and the same models to a web-scale search engine to retrieve images and video in response to a text query is more complicated. The difficulty arises from searching through billions of images to retrieve relevant and aesthetically pleasing photos efficiently and quickly in the context of specific text search queries.

In 2019, we discussed how Bing's image search continues to evolve toward a more intelligent and precise search engine through multi-granularity matching and an enhanced understanding of user queries, images, webpages, and their relationships. The vector-matching, attribute-matching, and Best Representative Query matching techniques help resolve a variety of complex cases, for example when users search for objects with specific context or attributes, such as {blonde man with a mustache} or {dance outfits for girls with a rose}[1].

Building upon previous innovation, we developed end-to-end multi-modality deep learning models to improve image search results even further.

Rank Multi Modal (RankMM) Models

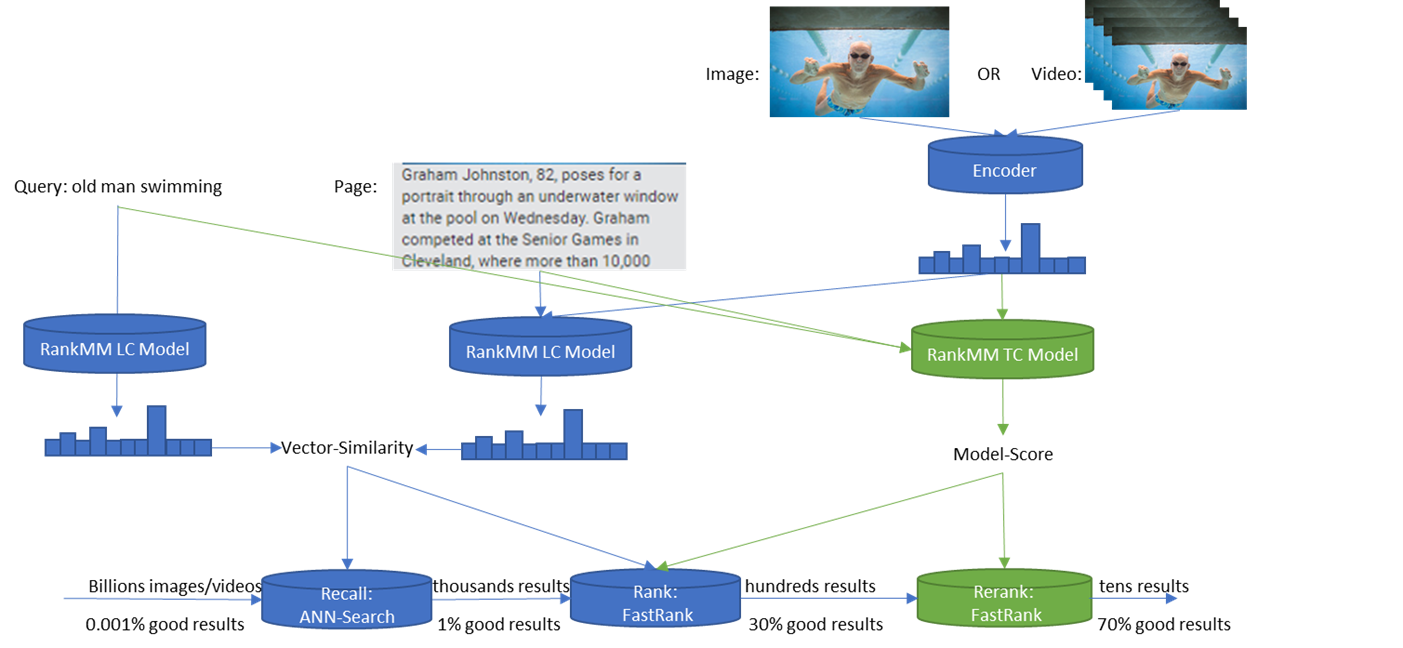

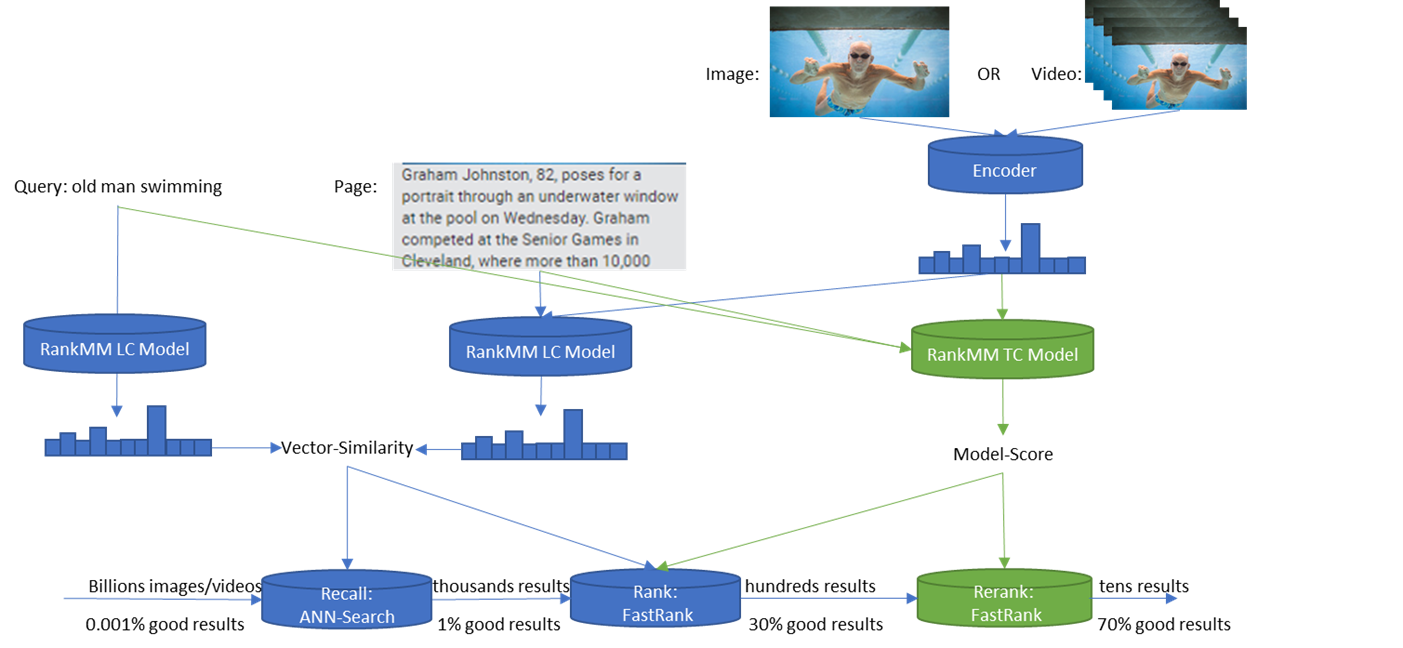

RankMM models are Visual Language (VL) models which take page context into account to improve image and video retrieval performance in a web-scale search engine. RankMM is a deep ranking model leveraging query, image/video, and the associated webpage to predict image/video relevance score. The RankMM model effectively combines the search paradigms of a text query, page context, and images to aid in image and video retrieval. Additionally, it enables different models to adapt to the cascading framework's various layers; models in each layer can use different model structures, training data, and training tasks.

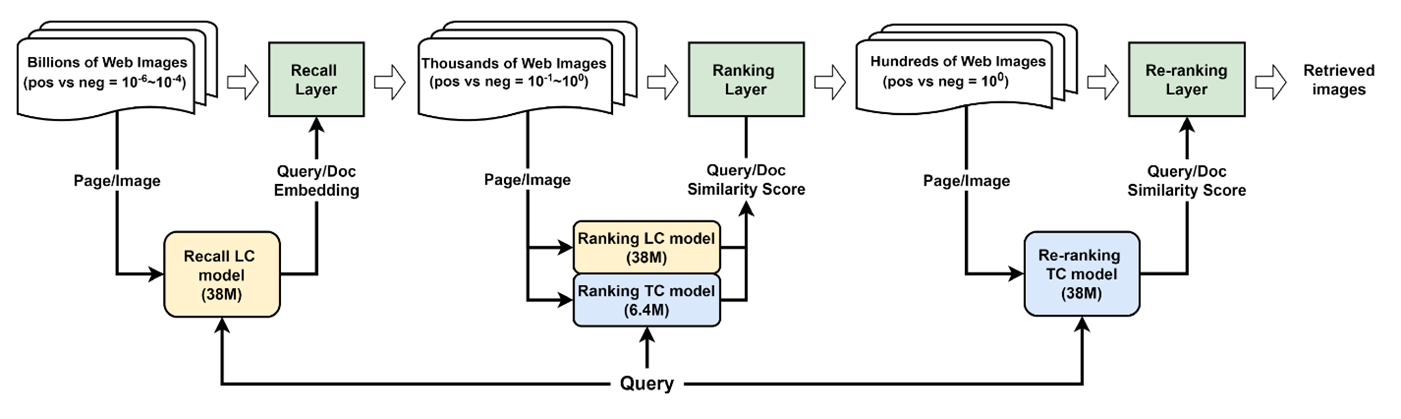

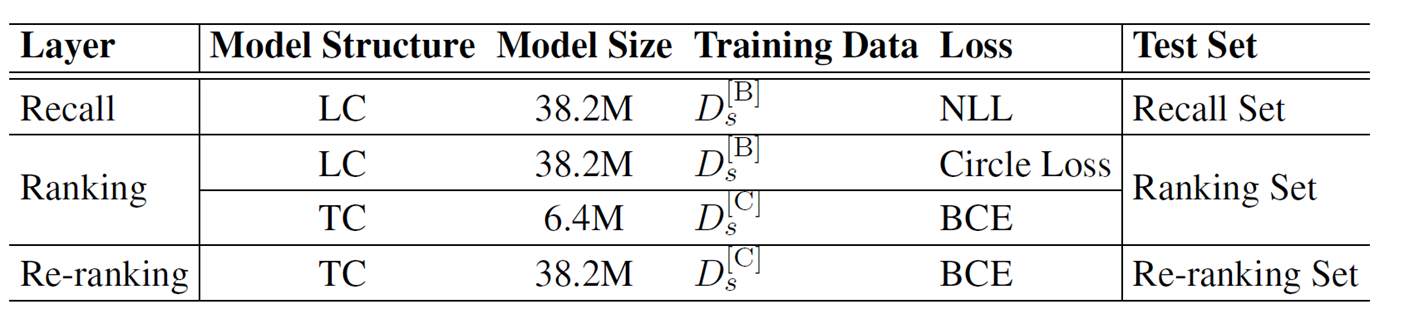

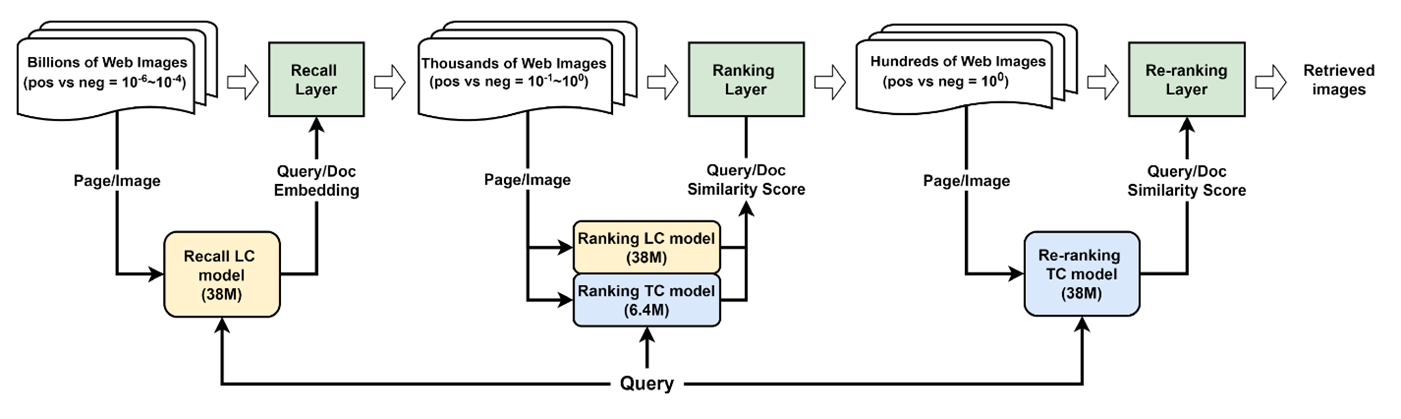

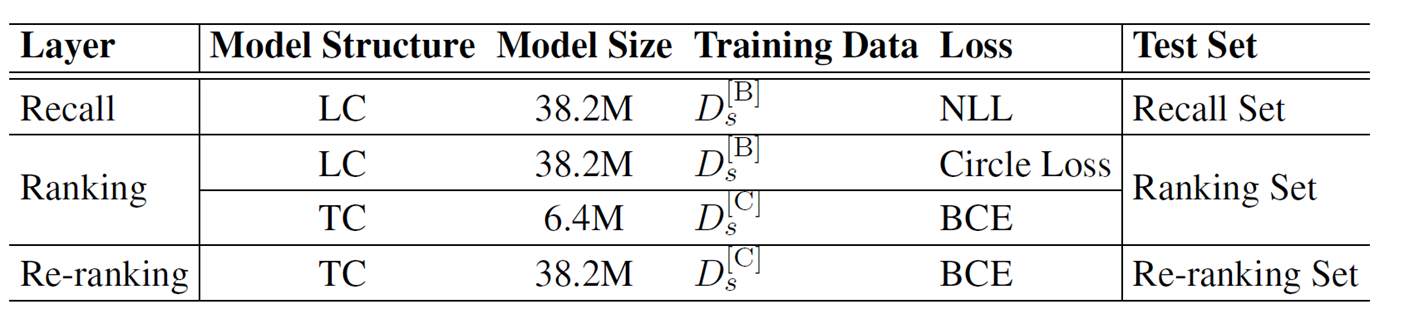

The cascade framework of highly efficient and cost-effective deep learning models for image retrieval consists of a recall layer, a ranking layer, and a re-ranking layer. The result set is gradually narrowed through each layer, and the proportion of relevant to irrelevant images and videos gradually increases.

Search Stack - Cascading Framework

The cascaded framework aims to resolve the complexity of retrieving results from billions of images and videos while meeting the relevance and runtime latency needs of a web-scale search engine. The framework has three separate components: the Recall layer, the Ranking layer, and the Re-ranking layer. The result set is narrowed sequentially through each layer to retrieve images in the most efficient manner possible.
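The narrowing described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the production stack: the function names, the cutoffs (`recall_k`, `rank_k`, `final_k`), and the toy `term_overlap` scorer are all hypothetical, and every stage here scores each document independently, whereas the re-ranking layer described later is list-wise.

```python
from typing import Callable, List, Sequence

# A scorer maps (query, document) to a relevance score; higher is better.
Scorer = Callable[[str, str], float]


def top_k(query: str, candidates: Sequence[str], scorer: Scorer, k: int) -> List[str]:
    """Keep the k highest-scoring candidates for the query."""
    return sorted(candidates, key=lambda doc: scorer(query, doc), reverse=True)[:k]


def cascade_search(
    query: str,
    corpus: Sequence[str],
    recall_scorer: Scorer,
    rank_scorer: Scorer,
    rerank_scorer: Scorer,
    recall_k: int = 1000,   # hypothetical cutoff: "thousands" after recall
    rank_k: int = 100,      # hypothetical cutoff: "hundreds" after ranking
    final_k: int = 10,      # hypothetical size of the final result list
) -> List[str]:
    """Narrow the candidate set layer by layer: recall, then rank, then re-rank."""
    recalled = top_k(query, corpus, recall_scorer, recall_k)
    ranked = top_k(query, recalled, rank_scorer, rank_k)
    return top_k(query, ranked, rerank_scorer, final_k)


def term_overlap(query: str, doc: str) -> float:
    """Toy scorer (hypothetical): number of distinct query terms found in the document."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


if __name__ == "__main__":
    corpus = ["red rose dress", "blue car", "rose garden", "dance outfit with a rose"]
    print(cascade_search("rose dress", corpus, term_overlap, term_overlap, term_overlap,
                         recall_k=3, rank_k=2, final_k=1))
```

The point of the design is that the cheapest scorer sees the whole corpus, while each later scorer can be more expensive because it only sees what the previous layer kept.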

Overview of the cascade search stack and visual language model in each layer

Recall Layer

The Recall layer's goal is to quickly narrow the result set from billions to thousands. The ratio of positives to negatives in this layer is between O(10⁻⁶) and O(10⁻⁴). Traditional search engines use sparse word match, which builds an inverted index from documents and recalls only documents that contain all the query terms. Modern systems also use dense vector match, in which deep learning models project queries and documents into the same semantic space and the documents with the highest similarity scores are returned as search results. In the recall layer for RankMM, we combine the result sets from both sparse word match and dense vector match as the input to the next layer. For the dense vector match, we use a loosely coupled (LC) model in this layer. In a later section, we will go over the loosely coupled model.

Ranking Layer

Following recall, the ranking models use predicted relevance scores to rank the thousands of images. In this layer, the ratio of positives to negatives is between O(10⁻¹) and O(10⁰). The ranking layer in traditional search engine systems is typically a learning-to-rank (LTR) model such as XGBoost[2] (Chen and Guestrin, 2016) or LambdaMART[3] (Burges, 2010). LTR models rank the document list based on hand-crafted features such as the number of matched terms between query and document, BM25[4] (Robertson et al., 2009), etc. To improve the ranking layer's quality, we create two transformer-based models, a loosely coupled (LC) model and a tightly coupled (TC) model, and incorporate their predicted scores into the LTR model. Given that the recall output is still in the thousands of images and the search latency is typically in milliseconds, the models in this layer should not be computationally expensive.

Re-Ranking Layer

The re-ranking layer reorders hundreds of top results from the previous ranking layer to improve precision. This layer contains a roughly equal number of positives and negatives, a ratio of O(10⁰). This layer performs the re-ranking task using a list-wise LTR model[5] (Cao et al., 2007). It is distinct from the LTR model used in the ranking layer in that its objective is to predict the optimal order of the list, rather than a single query-document pair's relevance score. Since the result set in this layer is much smaller, we can incorporate relatively heavier models to obtain better results.

Model Structure

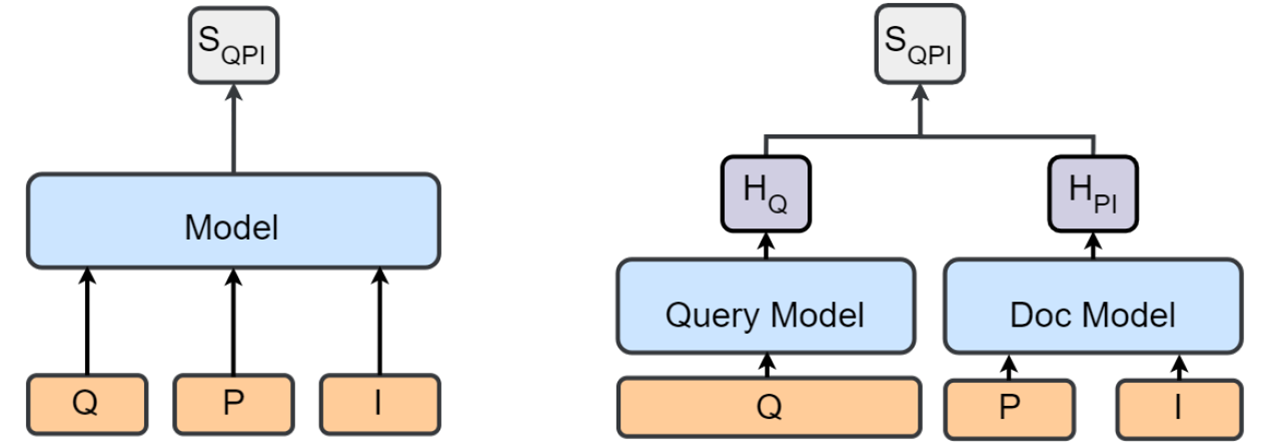

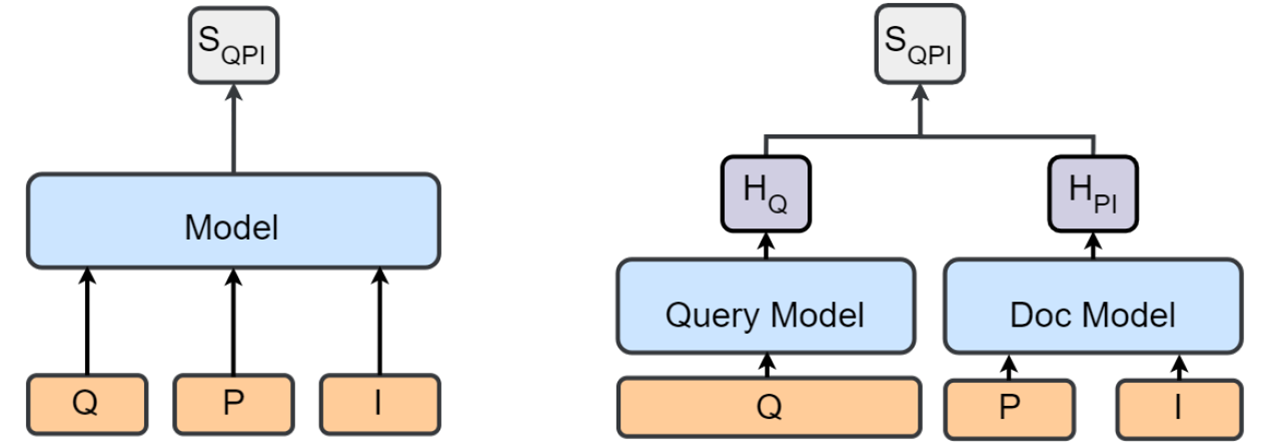

Our VL models take three inputs, a query (Q), a page (P), and an image (I), to determine the degree of similarity between each query and each document. Additional page and image information, such as the page title and image caption, is extracted from the document as context. We categorize the model structures used in our search stack into the tightly coupled (TC) model and the loosely coupled (LC) model.

Tightly Coupled

By combining the three inputs (Q, P, and I), the tightly coupled (TC) model generates a prediction score by learning a joint representation of all input modalities and inherently modeling their relationships. In a transformer-based structure, the attention layers model the interactions between pairs of tokens, so the TC model can capture the relationships among Q, P, and I. However, it is typically more expensive at runtime because we must run inference with all of the Q, P, and I inputs each time the user submits a new query.

Loosely Coupled

In contrast to the TC model, in a loosely coupled (LC) model the query and the other inputs are fed into two separate models, a query model and a document model. The document model's input can be multi-modal, including both page and image input. The LC model outputs the cosine distance between a query embedding vector and a document embedding vector. We call it a loosely coupled model because there is no direct interaction between the query and the document, and for that reason the TC model typically outperforms the LC model. The advantage of the LC model is cost: we can compute the document embedding vectors for all documents in the search index in advance, store them, and compute only the query embedding vector at runtime, reducing computational cost and search latency.
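To make the offline/online split concrete, here is a small sketch of the two-tower pattern. It rests on several assumptions: `toy_encode` is a hashed bag-of-words stand-in for the learned query and document encoders (the real models are transformer-based and the document side also consumes image input, which is not shown), the document text simply concatenates a page title and an image caption, and the score is cosine similarity, where cosine distance would be one minus that value. All names are hypothetical.

```python
import math
import zlib
from typing import Dict, List, Tuple

DIM = 256  # embedding size of the toy encoder (arbitrary choice)


def toy_encode(text: str) -> List[float]:
    """Stand-in for a learned encoder: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        # crc32 is deterministic across runs, unlike the built-in hash() for str.
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_document_index(documents: Dict[str, str]) -> Dict[str, List[float]]:
    """Offline step: embed every document once and store the vectors."""
    return {doc_id: toy_encode(text) for doc_id, text in documents.items()}


def search(query: str, index: Dict[str, List[float]], top_k: int = 3) -> List[Tuple[str, float]]:
    """Online step: embed only the query, then compare against stored vectors."""
    query_vec = toy_encode(query)
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


if __name__ == "__main__":
    # Hypothetical documents: page title and image caption joined into one string.
    docs = {
        "a": "blonde man with a mustache portrait photo",
        "b": "dance outfits for girls with a rose",
        "c": "mountain lake at sunrise",
    }
    index = build_document_index(docs)  # computed in advance
    print(search("man with a mustache", index))
```

Because the query never interacts with the document inside the encoders, the only per-query work is one encoder call plus vector comparisons, which is what keeps this structure cheap enough for the recall layer.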

We trained the models using a small amount of human-labeled data and large-scale semi-supervised data. First, we collected queries and their top 1K image results to form billions of <Q, P, I> triplets. Then, we applied weighted sampling to the whole corpus to generate universal training data and remove language bias. Next, we generated binary labels by treating the top 10 results as positive samples and the rest as negative. We also produced continuous labels by training a teacher model. For human labeling, we used a stratified sampling approach within each language to select data and graded each <Q, I> pair on three relevance levels: Excellent, Good, and Bad.
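The binary labeling rule and the weighted sampling step can be sketched as follows. The labeling rule (top 10 positive, the rest negative) comes from the description above; everything else is an assumption made for illustration. In particular, the post does not say how the sampling weights were chosen, so `inverse_frequency_weights` is just one plausible way to counter language imbalance, and all function and field names are hypothetical.

```python
import random
from collections import Counter
from typing import List, NamedTuple, Sequence, Tuple


class Example(NamedTuple):
    query: str
    page: str
    image: str
    label: int  # 1 = positive, 0 = negative


def binary_labels(
    query: str,
    ranked_results: Sequence[Tuple[str, str]],
    num_positive: int = 10,
) -> List[Example]:
    """Label the first num_positive (page, image) results as positive, the rest as negative."""
    return [
        Example(query, page, image, 1 if rank < num_positive else 0)
        for rank, (page, image) in enumerate(ranked_results)
    ]


def inverse_frequency_weights(languages: Sequence[str]) -> List[float]:
    """Assumed weighting: each item is weighted by 1 / (count of its language)."""
    counts = Counter(languages)
    return [1.0 / counts[lang] for lang in languages]


def weighted_sample(items: Sequence, weights: Sequence[float], k: int, seed: int = 0) -> list:
    """Draw k items with replacement, with probability proportional to weight."""
    rng = random.Random(seed)
    return rng.choices(list(items), weights=list(weights), k=k)


if __name__ == "__main__":
    results = [(f"page{i}", f"image{i}") for i in range(1000)]
    examples = binary_labels("dance outfits for girls", results)
    print(sum(e.label for e in examples), "positives out of", len(examples))

    query_languages = ["en", "en", "en", "fr"]
    weights = inverse_frequency_weights(query_languages)
    print(weighted_sample(query_languages, weights, k=8))
```

With inverse-frequency weights, each language contributes the same total probability mass to the sample regardless of how many queries it has in the corpus.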

Results

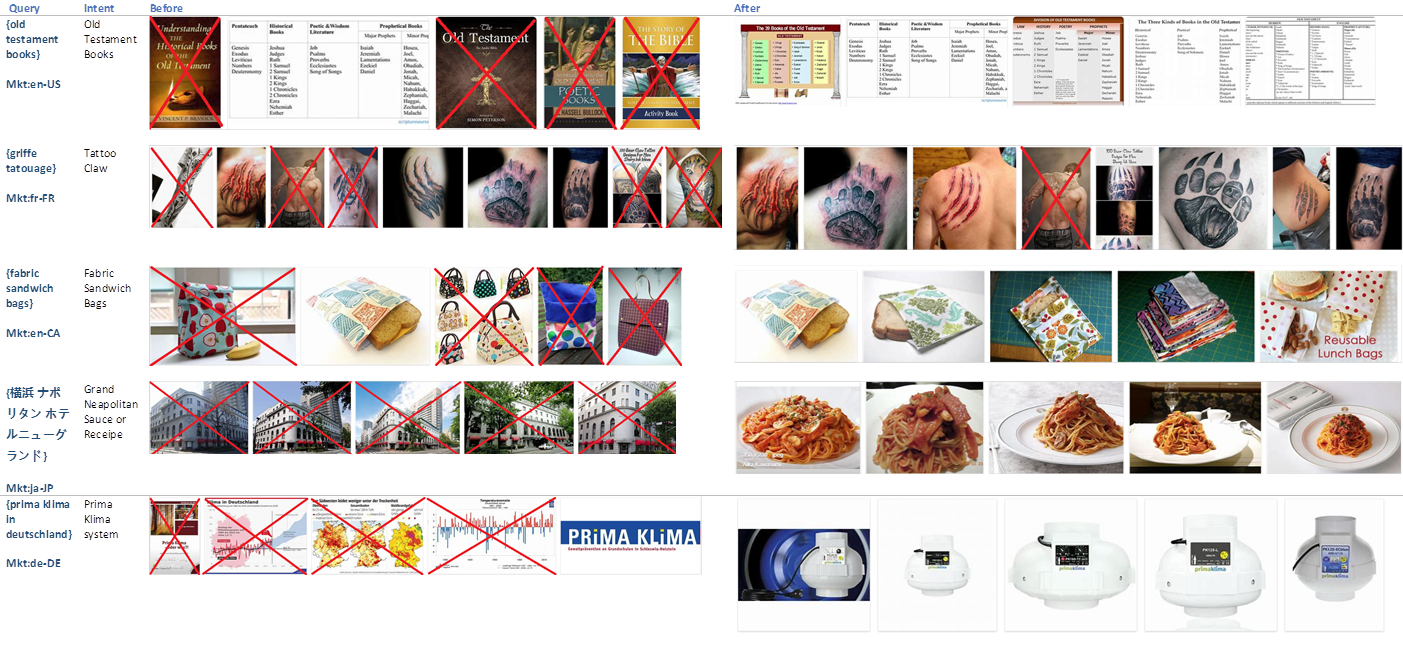

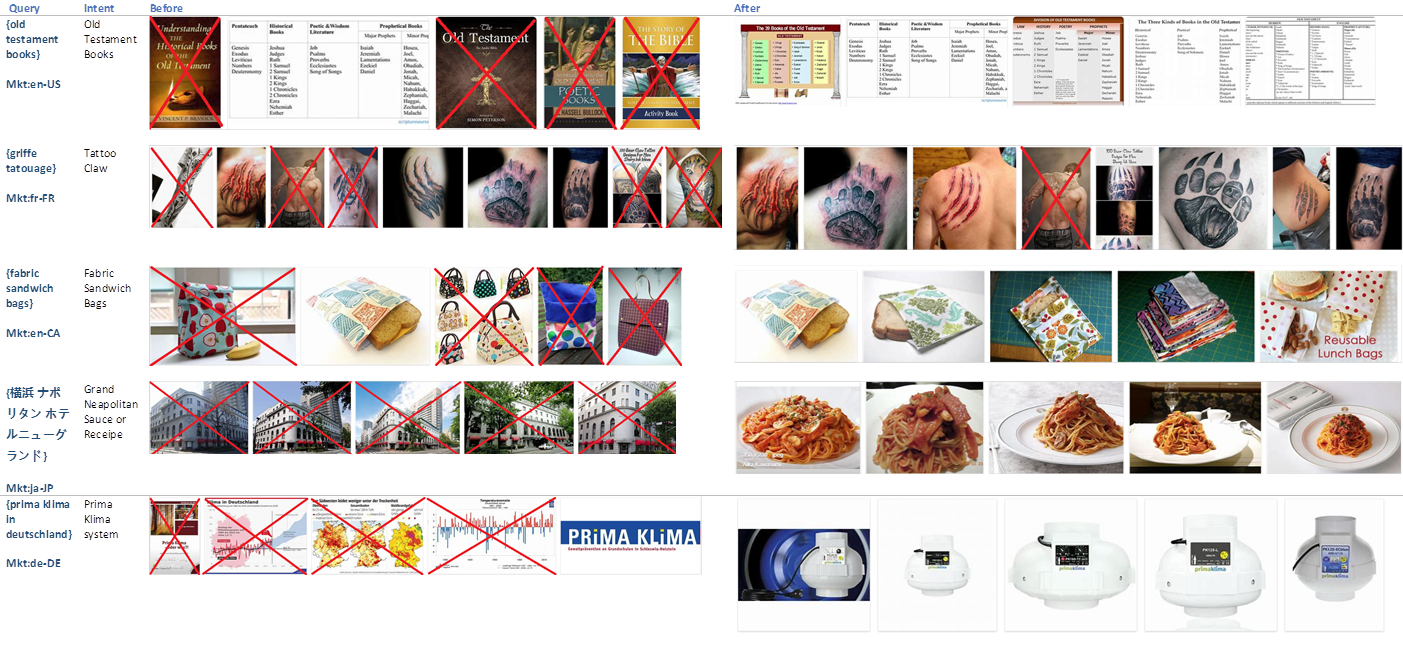

We examined several different model structures for fusing the three signals, which can be an excellent starting point for future research. Additionally, we apply distinct RankMM models to each layer in a cascaded framework to account for each layer's unique properties. We see quality improvements in each layer because of those models. RankMM models have contributed significantly to improvements in the core quality of image and video search in real-world scenarios over the last year.

See for yourself how deep multimodal models in the image search ranking stack help improve user experience and search performance on bing.com/images, both for your search queries and in Visual Search.

Share your experiences and provide feedback so that we can continue to improve our models.

- Edward Cui, Lin Su, Yu Bao, Aaron Zhang, Ashish Jaiman, and The Bing Multimedia team.