Deep learning is helping to make Bing’s search results more intelligent. Here’s a peek behind the curtain of how we’re applying some of these techniques.

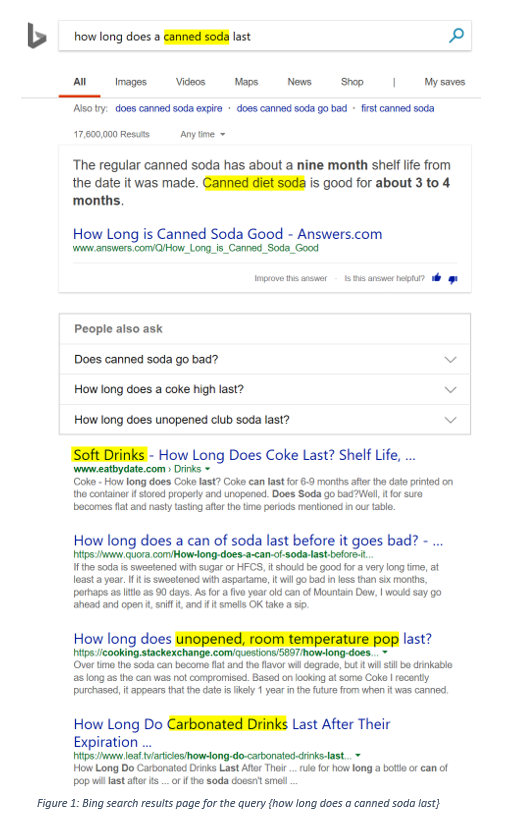

Last week, I noticed there were dates printed on my soda cans and I wanted to know what they referred to: sell-by dates, best-by dates or expiration dates?


Searching online wasn’t so easy because I didn’t know how to phrase my search query. For instance, searching for {how long does canned soda last} may miss other relevant results, including those that use synonyms for soda like 'pop' or 'soft drink'. This is just one example where we struggle to pick the right terms to get the search results we want. Consequently, we must search multiple times to get the best results! But with deep learning, we can help solve this problem.

Different Queries, Similar Meaning: Understanding Query Semantics

Traditionally, to satisfy the search intent of a user, search engines find and return web pages matching query terms (also known as keyword matching). This approach has helped a lot throughout the history of search engines. However, as users start “speaking their queries” to intelligent speakers and phones, and become more comfortable expressing their search needs in natural language, search engines need to become more intelligent and understand the true meaning behind a query: its semantics. This lets you see results from other queries with similar meaning, even if they are expressed using different terms and phrases.

Although my original query uses the terms {canned soda}, Bing’s search results show me that it can also refer to {canned diet soda}, {soft drinks}, {unopened room temperature pop} or {carbonated drinks}. I can find a comprehensive list of web pages and answers in a single search without issuing multiple variants of the original query, saving me the detective work of figuring out the soda industry!

Why is a deeper understanding of query meaning interesting?

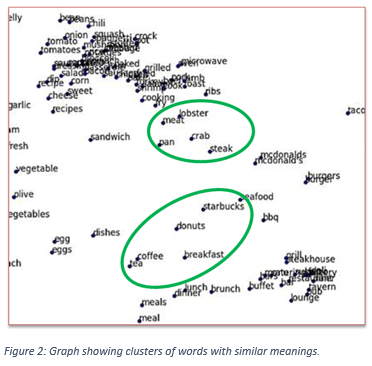

Bing can show results from similar queries with the same meaning by building upon recent foundational work in which each word is represented as a numerical quantity known as a vector. This has been the subject of previous work such as word2vec and GloVe. Each vector captures information about what a word means, that is, its semantics. Words with similar meanings get similar vectors, and the vectors can be projected onto a 2-dimensional graph for easy visualization. These vectors are trained so that words with similar meanings end up near each other in the vector space. We trained a GloVe model over Bing’s query logs and generated a 300-dimensional vector to represent each word.

You can see some interesting clusters of words circled in green above. Words like lobster, meat, crab and steak are all clustered together. Similarly, Starbucks is close to donuts, breakfast, coffee, tea, etc. Vectors provide a natural, compute-friendly representation of information in which distances matter: the closer two vectors are, the more similar their meanings.
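To make "closer means more similar" concrete, here is a minimal sketch using cosine similarity over toy vectors. The 3-dimensional values below are invented for illustration and are not taken from Bing's GloVe model; the helper names `cosine_similarity` and `most_similar` are also our own.

```python
import math

# Toy 3-dimensional vectors, invented for illustration only.
# Real GloVe vectors (300 dimensions in this post) are learned from data.
WORD_VECTORS = {
    "lobster": [0.9, 0.1, 0.0],
    "crab":    [0.8, 0.2, 0.1],
    "coffee":  [0.1, 0.9, 0.2],
    "tea":     [0.0, 0.8, 0.3],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; higher means closer in direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, vectors):
    """Return the other word whose vector is closest to that of `word`."""
    return max(
        (w for w in vectors if w != word),
        key=lambda w: cosine_similarity(vectors[word], vectors[w]),
    )
```

With these toy values, `most_similar("lobster", WORD_VECTORS)` picks `"crab"` rather than `"coffee"` or `"tea"`, mirroring the clusters in the figure.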

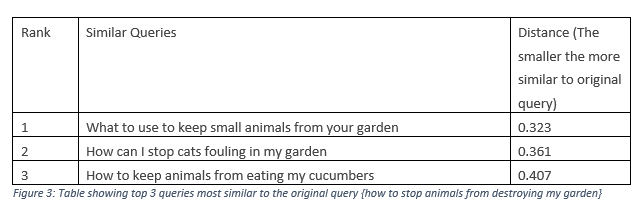

Once we were able to represent single words as vectors, we extended this capability to represent collections of words, such as queries. Such representations have an intrinsic property where physical proximity corresponds to the similarity between queries. We represent queries as vectors and can now search for nearest neighbor queries based on the original query. For example, for the query {how to stop animals from destroying my garden}, the nearest neighbor search leads to the following results:

As can be seen, the query {how to stop animals from destroying my garden} is close to {how can I stop cats fouling in my garden}. One could argue that this type of “nearby” query could be produced by traditional query rewriting methods. However, replacing “animals” with “cats” and “destroying” with “fouling” in all cases would be a very strong rewrite, which would either not be done by traditional systems or, if triggered, would likely produce a lot of bad, aggressive rewrites. Only when we capture the entire semantics of the sentence can we safely say that the two queries are similar in meaning.
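The nearest-neighbor idea can be sketched in a few lines. This post does not say how word vectors are combined into a query vector, so the sketch below assumes the simplest option, averaging the vectors of known words; the 2-dimensional values and the function names are hypothetical.

```python
import math

# Hypothetical 2-dimensional word vectors, made up for this example.
WORD_VECTORS = {
    "stop": [0.5, 0.5], "animals": [0.9, 0.1], "cats": [0.8, 0.2],
    "destroying": [0.7, 0.3], "fouling": [0.75, 0.25], "garden": [0.9, 0.2],
    "canned": [0.1, 0.9], "soda": [0.0, 1.0], "last": [0.2, 0.7],
}

def embed_query(query):
    """Average the vectors of known words (an assumed, simplistic composition)."""
    vectors = [WORD_VECTORS[w] for w in query.lower().split() if w in WORD_VECTORS]
    if not vectors:
        raise ValueError("query contains no known words")
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def nearest_queries(query, candidates, k=1):
    """Return the k candidate queries whose vectors are closest to the query's."""
    target = embed_query(query)
    ranked = sorted(
        candidates,
        key=lambda c: cosine_similarity(target, embed_query(c)),
        reverse=True,
    )
    return ranked[:k]
```

Given the candidates {how can I stop cats fouling in my garden} and {how long does canned soda last}, the garden query's nearest neighbor is the cats query, even though neither "animals" nor "destroying" appears in it. A production system would use a learned sentence encoder and an approximate nearest-neighbor index rather than a full sort.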

Since web search involves retrieving the most relevant web pages for a query, this vector representation can be extended beyond just queries to the title and URL of webpages. Webpages with titles and URLs that are semantically similar to the query become relevant results for the search. This semantic understanding of queries and webpages using vector representations is enabled by deep learning and improves upon traditional keyword matching approaches.

How is deeper search query understanding achieved in Bing?

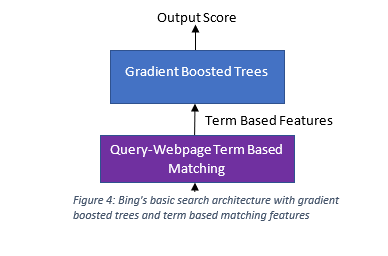

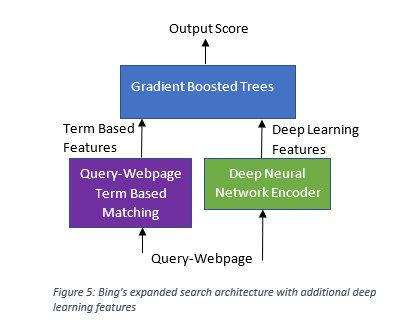

Traditionally, to identify the best results, web search relies on engineer-defined characteristics of web pages (features), such as the number of keyword matches between the query and the web page’s title, URL and body text. (This is just an illustration. Commercial search engines typically use thousands of features based on text, links, statistical machine translation, etc.) At run time, these features are fed into classical machine learning models like gradient boosted trees to rank web pages. Each query-web page pair becomes the fundamental unit of ranking.
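As a rough illustration of such hand-defined features, the sketch below counts how many distinct query terms appear in each field of a page. The tokenizer, the field names and the feature names are assumptions made for this example, not Bing's actual feature set.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def keyword_match_features(query, page):
    """Count distinct query terms found in each field of one query-page pair."""
    query_terms = set(tokenize(query))
    return {
        f"{field}_matches": len(query_terms & set(tokenize(page[field])))
        for field in ("title", "url", "body")
    }

# A hypothetical page used only to exercise the function.
EXAMPLE_PAGE = {
    "title": "How long does soda last?",
    "url": "https://example.com/soda-shelf-life",
    "body": "Unopened soft drinks keep for months.",
}
```

For the query {how long does canned soda last}, this page scores 5 title matches, 1 URL match and 0 body matches. The body is clearly relevant but uses "soft drinks", which is exactly the kind of miss that pure keyword features cannot recover from.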

Deep learning improves this process by allowing us to automatically generate additional features that more comprehensively capture the intent of the query and the characteristics of a webpage.

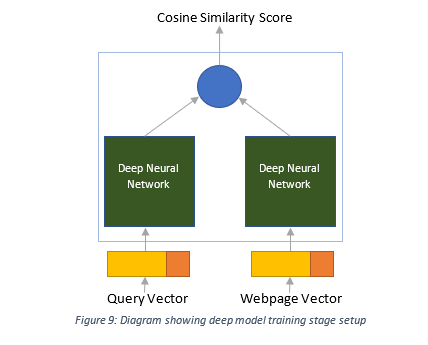

Specifically, unlike human-defined and term-based matching features, these new features learned by deep learning models can better capture the meaning of phrases missed by traditional keyword matching. This improved understanding of natural language (i.e. semantic understanding) is inferred from end user clicks on webpages for a search query. The deep learning features represent each text-based query and webpage as a string of numbers known as the query vector and document vector respectively.

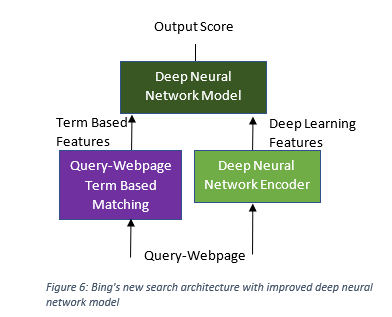

To further amplify the impact of deep learning features, we also replaced the classical machine-learned model with a deep learning model to do the ranking itself. This model runs on 100% of our queries, meaningfully affecting over 40% of the results, and it achieves a runtime performance of a few milliseconds through custom, hardware-specific model optimizations.

Deep neural network technique in Bing search

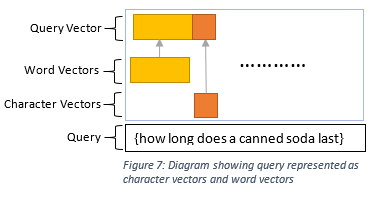

Looking closer at the Deep Neural Network Model and Encoder in the context of our first example query reveals that each character from the input text query is represented as a string of numbers (a “character vector”). In addition, each word in the query is separately represented by a trained word vector. Finally, the character vectors are joined with the word vectors to represent the query.
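One way to read "joined" is concatenation: each token gets a character-level part and a word-level part placed side by side. The sketch below follows that reading. The dimensions, the toy character embedding and the averaging of character vectors are all assumptions for illustration; the real encoder learns these values.

```python
CHAR_DIM = 2
WORD_DIM = 3

# Hypothetical word vectors; words outside this table are "unknown".
WORD_VECTORS = {"soda": [0.0, 1.0, 0.2], "canned": [0.1, 0.9, 0.4]}

def char_vector(ch):
    """Toy deterministic character embedding (a stand-in for learned values)."""
    code = ord(ch)
    return [(code % 7) / 7.0, (code % 11) / 11.0]

def encode_token(token):
    """Concatenate an averaged character vector with the token's word vector."""
    chars = [char_vector(ch) for ch in token]
    char_part = [sum(v[i] for v in chars) / len(chars) for i in range(CHAR_DIM)]
    word_part = WORD_VECTORS.get(token, [0.0] * WORD_DIM)  # zeros if unknown
    return char_part + word_part  # "joined" is read here as concatenation

def encode_query(query):
    """Encode every whitespace-separated token of the query."""
    return [encode_token(t) for t in query.lower().split()]
```

A practical benefit of the character part is visible with `encode_query("canned soda pop")`: "pop" has no entry in the word table, so its word part is all zeros, but its character part still carries signal.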

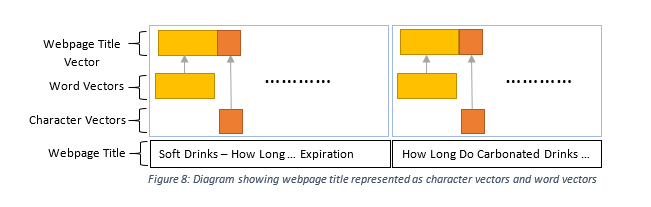

In the same way we can represent each web page’s title, URL, and text as character and word vectors.

The query and webpage vectors are then fed into a deep model known as a convolutional neural network (CNN). This model improves semantic understanding and generalization between queries and webpages and calculates the Output Score for ranking. The score is computed by measuring the similarity between a query and a webpage’s title, URL, etc. using a distance metric such as cosine similarity.
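The post does not describe the CNN's architecture, so the following is only a minimal sketch of the general pattern: a 1-D convolution with ReLU slides over consecutive token vectors, max pooling turns the result into a fixed-size vector, and cosine similarity between the query and page vectors gives a score. The filter weights and token vectors are hand-picked toy values; in a real model they would be learned, for example from click data.

```python
import math

def conv1d_max_pool(token_vectors, filters, window=2):
    """Apply each filter to every window of consecutive tokens, then keep the max."""
    dim = len(token_vectors[0])
    # Pad short inputs with zero vectors so at least one window exists.
    padded = token_vectors + [[0.0] * dim] * max(0, window - len(token_vectors))
    pooled = []
    for weights in filters:  # each filter holds window * dim weights
        best = 0.0
        for start in range(len(padded) - window + 1):
            flat = [x for vec in padded[start:start + window] for x in vec]
            activation = max(0.0, sum(w * x for w, x in zip(weights, flat)))  # ReLU
            best = max(best, activation)
        pooled.append(best)
    return pooled

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def output_score(query_tokens, page_tokens, filters):
    """Encode both sides with the same filters and compare the pooled vectors."""
    return cosine_similarity(
        conv1d_max_pool(query_tokens, filters),
        conv1d_max_pool(page_tokens, filters),
    )

# Toy inputs: 2-dimensional token vectors and two hand-picked filters.
FILTERS = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
QUERY_TOKENS = [[1.0, 0.0], [0.9, 0.1]]
CLOSE_TITLE = [[0.8, 0.2], [1.0, 0.0]]
FAR_TITLE = [[0.0, 1.0], [0.1, 0.9]]
```

With these values, `output_score(QUERY_TOKENS, CLOSE_TITLE, FILTERS)` is much higher than `output_score(QUERY_TOKENS, FAR_TITLE, FILTERS)`, so the semantically closer title would rank first.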

Conclusion

Thanks to the new technologies enabled with deep learning, we can now go way beyond simple keyword matches in finding relevant information for user queries.

As with our initial example of {how long does canned soda last}, you can find out that soda is also called 'pop' in the United States or 'fizzy drink' in England, while scientifically it's referred to as a carbonated drink. From the soft drink industry perspective, it’s also useful to note the difference between diet and non-diet sodas. Most diet sodas start to lose quality about 3 months after the date stamped, while non-diet soda will last about 9 months after the date, a deeper industry-level insight that may not be common knowledge for the average person! By applying deep learning for better semantic understanding of your search queries, you can now gain comprehensive, fast insights from diverse perspectives beyond your individual human experiences. Take it for a spin and let us know what you think using Bing Feedback!

From the Search and AI team (in alphabetical order):

Applied Scientists: Aniket Chakrabarti, Jane Kou, Xiaoqiu Huang

Senior Applied Scientist Tech Leader: Chen Zhou

Technical Program Manager: Chun Ming Chin