Over the past few months, Bing's shopping and recipe experiences have benefited from multiple enhancements to visual richness and overall quality. In the last multimedia post (The Image Graph - Powering the Next Generation of Bing Image Search) we talked about how we construct the Image Graph offline to power the backend of our experiences. So let’s talk more about the online and front-end part of this equation (the features we created to bring the experience closer to the user) and how they connect to the offline graph we described in the prior blog post.

Shopping with Images

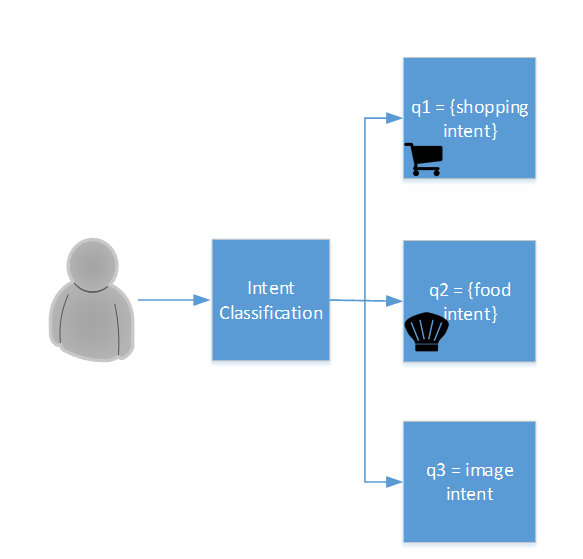

As we monitored usage patterns and feedback, we realized that user intent varies greatly for image searches, with the core use case of ‘search for images to look at’ being just one. Two of the most common scenarios that emerged are shopping and searching for recipes, and we recently shipped features for both. Our ambition is to help users complete any of these tasks in an intuitive and satisfying way. Based on the detected query intent, Bing does the hard work of optimizing the presented interface and including the most relevant knowledge from the web.

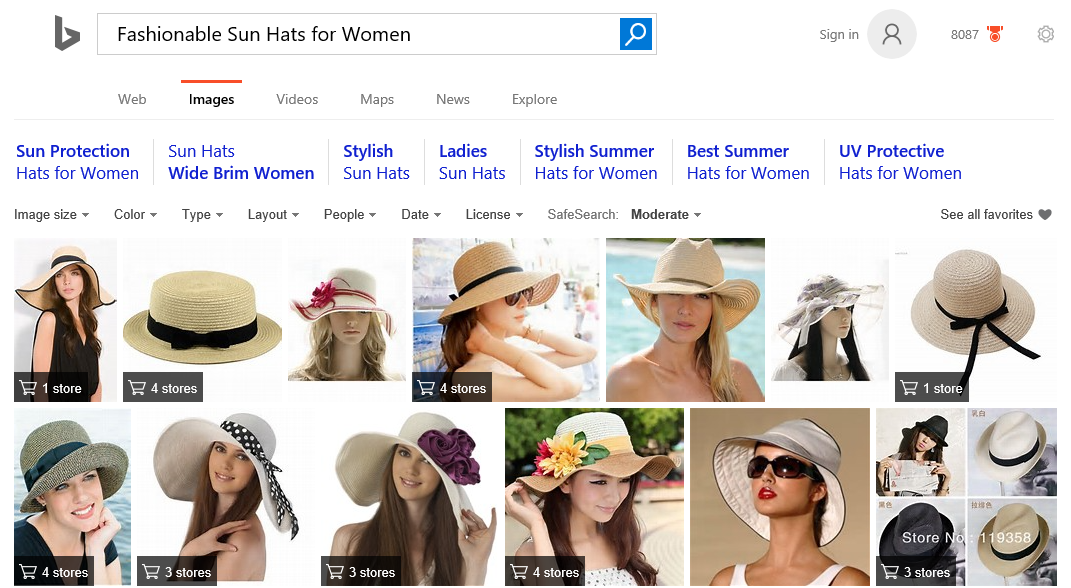

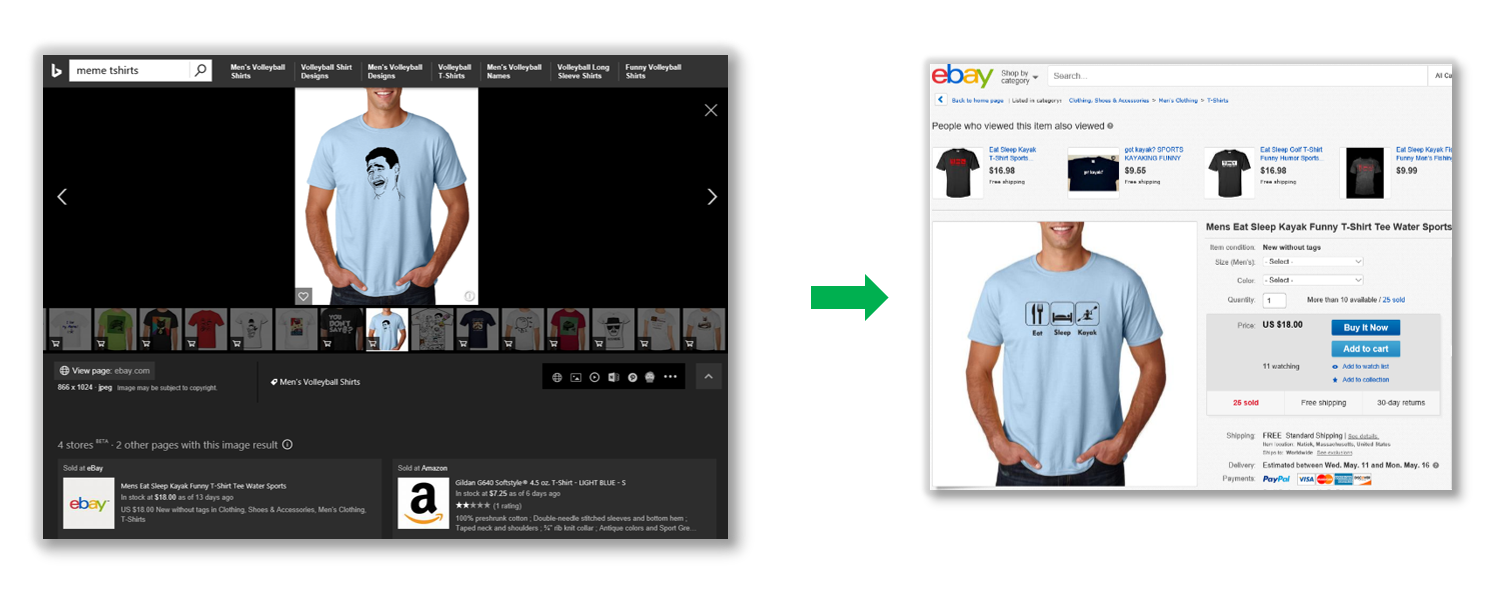

When you issue a query with a shopping intent, referencing items that are usually shopped for 'visually,' you will see certain results tagged with shopping cart badges.

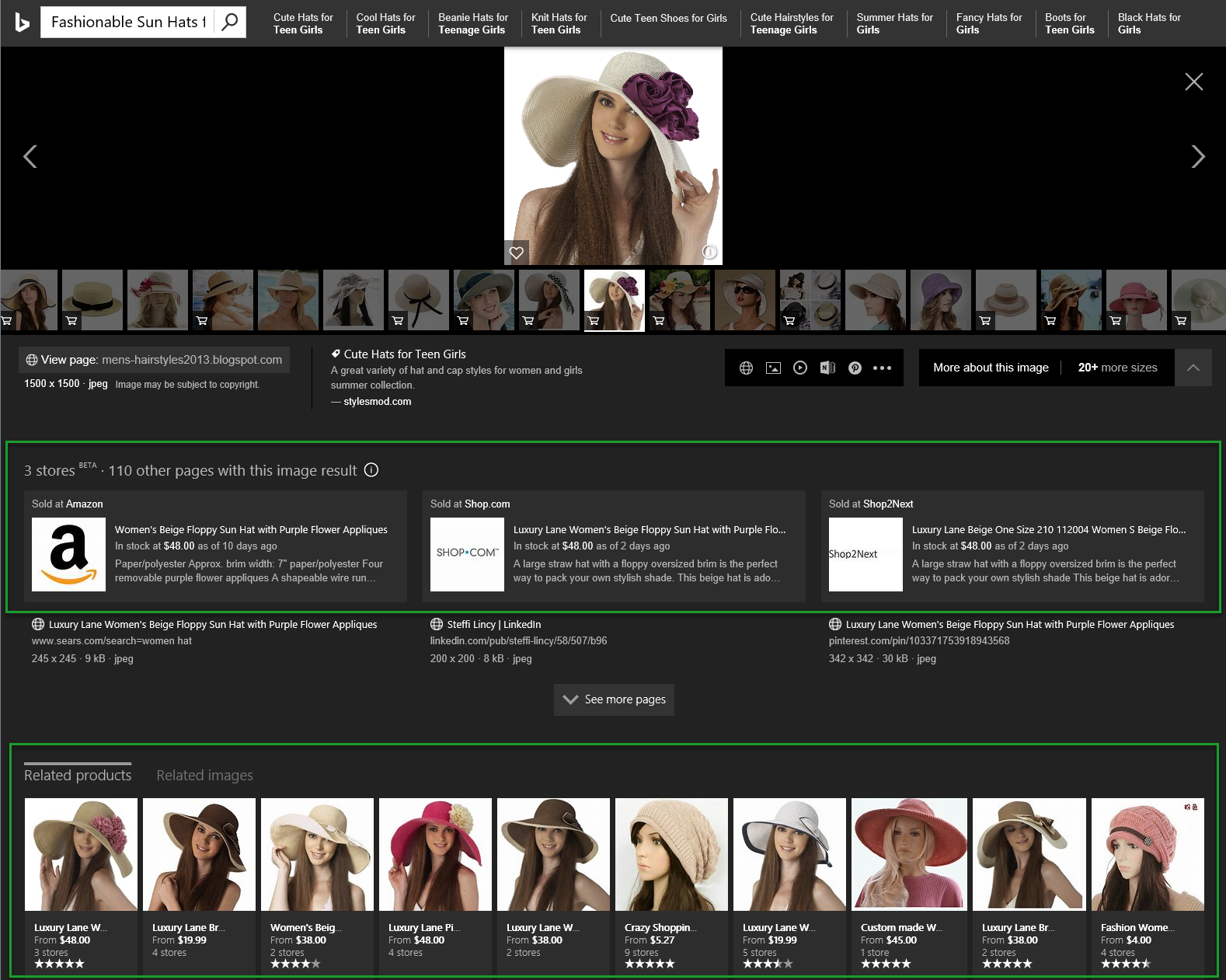

The badges indicate that we've been able to locate relevant shopping pages offering the given item for sale. When you click on the thumbnail and scroll down (or just click on a link embedded in the badge), you will see image details along with links to pages where the item can be bought, and links to related products if available (the latter to be covered in more depth in one of the upcoming blogs).

Query Intent

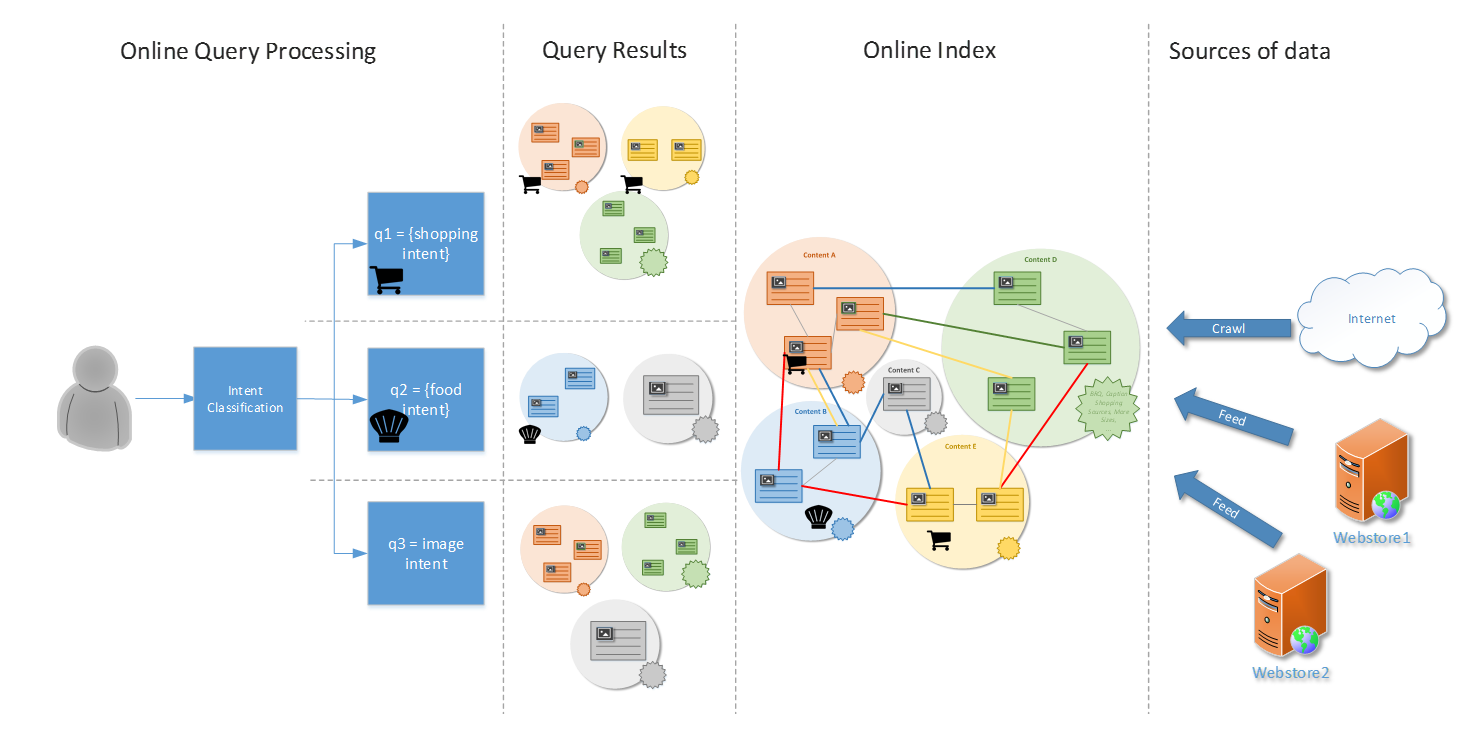

To make it all work we had to start at the beginning: by better understanding the types of queries that have a high likelihood of shopping intent, and discerning where users would find the shopping-cart badge most useful. We studied query logs and engagement data to identify the query patterns that most frequently lead to purchases or visits to retail pages. We then built corresponding machine-learned models to predict shopping intent for queries.
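As a rough illustration of the log-analysis step, the sketch below estimates how often each query was followed by a visit to a retail page and flags queries above a threshold. The log format, the function names and the `min_rate` and `min_count` thresholds are all hypothetical, chosen only for this example; the production models are machine-learned and considerably more involved.

```python
from collections import Counter

def shopping_intent_rates(log):
    """Map each normalized query to (retail-click rate, times seen).

    `log` is an iterable of (query, retail_click) pairs, a made-up format
    used only for this illustration.
    """
    shown, retail = Counter(), Counter()
    for query, retail_click in log:
        q = query.strip().lower()          # crude normalization
        shown[q] += 1
        retail[q] += int(retail_click)
    return {q: (retail[q] / shown[q], shown[q]) for q in shown}

def likely_shopping_queries(log, min_rate=0.5, min_count=2):
    """Return queries whose retail-click rate clears the (assumed) thresholds."""
    stats = shopping_intent_rates(log)
    return sorted(
        q for q, (rate, n) in stats.items()
        if rate >= min_rate and n >= min_count
    )

toy_log = [
    ("red summer dress", True),
    ("red summer dress", True),
    ("Red Summer Dress", False),
    ("pope", False),
    ("pope", False),
    ("pope", True),
]

print(likely_shopping_queries(toy_log))
```

In this toy log the dress query clears the threshold while 'pope' does not, even though someone did click through to a retail page for it once.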

As we tested initial versions internally, we monitored their performance and learned from it. Our early attempts were too trigger-happy: we were displaying shopping badges for queries such as 'pope' or 'president Obama' :) Puzzled, we investigated further and discovered that what was for sale in those cases were simply posters, mugs or t-shirts related to the corresponding people. Items of this kind appeared to be quite popular with our users, but were not expected for this type of query. Subsequent iterations improved our triggering significantly, making it worthy of exposing to our users. Now that the feature is released, we continue to monitor, evaluate and refine our models.

Content and Intent

Identifying target queries is definitely important, but that is only part of the puzzle. Another key ingredient is the actual shopping pages and their ranking. We developed ways of evaluating the quality of shopping pages, taking into account such things as availability, pricing information, informative pictures of the product, product reviews, prominence of the domain and more.

As part of this we used a number of cutting-edge technologies to help us extract and organize this information. To discover product shopping pages, we relied on structured information embedded in pages using standards such as schema.org and Open Graph. To cover cases where explicit product meta-information was not directly published, we developed other ML-based approaches to extract accurate information and to automatically decide whether a given page is a shopping page and, if so, of what quality.
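To make the structured-data part more concrete, here is a minimal sketch of how a page could be checked for product markup using only the Python standard library. It looks for a schema.org `Product` object in a JSON-LD script block and for an Open Graph `og:type` of `product` (a widely used convention rather than one of the core Open Graph types). The class and function names are ours, and this is an illustration under those assumptions, not the crawler's actual logic.

```python
import json
from html.parser import HTMLParser

class ProductMarkupParser(HTMLParser):
    """Collects Open Graph meta properties and JSON-LD blocks from a page."""

    def __init__(self):
        super().__init__()
        self.og = {}          # e.g. {"og:type": "product"}
        self.json_ld = []     # parsed JSON-LD payloads
        self._in_json_ld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        if tag == "meta" and prop.startswith("og:"):
            self.og[prop] = attrs.get("content") or ""
        elif tag == "script" and attrs.get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.json_ld.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # ignore malformed JSON-LD blocks

def looks_like_product_page(html):
    """True if the page declares itself a product via Open Graph or JSON-LD."""
    parser = ProductMarkupParser()
    parser.feed(html)
    if parser.og.get("og:type", "").lower() == "product":
        return True
    for block in parser.json_ld:
        items = block if isinstance(block, list) else [block]
        for item in items:
            if not isinstance(item, dict):
                continue
            types = item.get("@type")
            if types == "Product" or (isinstance(types, list) and "Product" in types):
                return True
    return False

sample = """
<html><head>
<meta property="og:title" content="Blue Sneakers">
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Blue Sneakers",
 "offers": {"@type": "Offer", "price": "59.99", "priceCurrency": "USD"}}
</script>
</head><body></body></html>
"""

print(looks_like_product_page(sample))
```

Pages without such markup are the ones that need the ML-based classification mentioned above.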

In fact, we also developed a complete API to allow merchants and webmasters to plug their catalogs into our crawler. If you own a web store and would like the Bing crawler to consider your product pages for our Bing shopping experience, just follow the steps described here: Being a Part of the Bing Image Search Ecosystem. This page may also serve as a helpful reference: Bing Image Data Feed.

Our goal was to identify the largest possible number of pages offering a given item for sale, then from these pick the best offers to present to our users based on multiple criteria. Note that this is quite a different approach from simply showing ads from the highest bidder at the top of shopping search results.
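One simple way to picture "pick the best offers based on multiple criteria" is a weighted score over page-quality signals like the ones listed earlier (availability, pricing information, pictures, reviews, domain prominence). The sketch below is purely illustrative: the `Offer` fields, the weights and the linear scoring are assumptions made for this example and do not describe the actual ranker.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    url: str
    in_stock: bool
    has_price: bool
    image_quality: float      # 0..1, hypothetical signal
    review_score: float       # 0..1, hypothetical signal
    domain_prominence: float  # 0..1, hypothetical signal

# Hypothetical weights; a real system would learn these from data.
WEIGHTS = {
    "in_stock": 0.30,
    "has_price": 0.20,
    "image_quality": 0.20,
    "review_score": 0.15,
    "domain_prominence": 0.15,
}

def offer_score(offer):
    """Weighted sum of the quality signals (booleans count as 0 or 1)."""
    return sum(w * float(getattr(offer, name)) for name, w in WEIGHTS.items())

def best_offers(offers, k=3):
    """Return the k highest-scoring offers, best first."""
    return sorted(offers, key=offer_score, reverse=True)[:k]

offers = [
    Offer("https://shop-c.example/item", True, False, 0.4, 0.2, 0.3),
    Offer("https://shop-a.example/item", True, True, 0.9, 0.8, 0.5),
    Offer("https://shop-b.example/item", False, True, 1.0, 1.0, 1.0),
]

for offer in best_offers(offers, k=2):
    print(offer.url, round(offer_score(offer), 3))
```

Note that nothing in this score depends on how much a merchant pays, which is the point of the relevance-driven approach described above.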

We were determined to provide a superior shopping experience for heavily visual categories, where users don't necessarily refer to products by their precise names or model numbers but instead describe them by their looks, as is often the case with fashion items. This presented us with an additional set of challenges.

We want to provide the most natural experience, one that closely mimics the way humans search, browse and evaluate items in those visual-heavy categories. Rather than rely solely on available metadata and product descriptions, we incorporate pure visual similarity to help us find the same or similar items for sale on different websites.

How do we tell then, in the absence of precise model numbers, that items for sale at two different websites are the same when the names don’t necessarily match? How do we help our users find similar items?

To help answer these questions with high quality we went as far as building out a separate cluster bed with custom machine learning algorithms doing state-of-the-art image analysis to enable nearest-neighbor search based on visual similarity. The results of this work populate the available shopping sources for a given product in the Image Insights page shown earlier.
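For readers unfamiliar with nearest-neighbor search, the idea can be sketched in a few lines: each image is represented by a feature vector, and similar-looking images are the ones whose vectors point in nearly the same direction. The example below uses brute-force cosine similarity over tiny hand-made vectors. The vectors, image identifiers and function names are invented for illustration; a production system would use learned image features and an approximate index rather than a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def nearest_neighbors(query_vec, index, k=2):
    """Return the k most similar images as (image_id, similarity), best first."""
    scored = [
        (cosine_similarity(query_vec, vec), image_id)
        for image_id, vec in index.items()
    ]
    scored.sort(reverse=True)
    return [(image_id, score) for score, image_id in scored[:k]]

# Toy 3-dimensional "visual features"; real features have far more dimensions.
index = {
    "shop-a/red-dress.jpg": [0.9, 0.1, 0.0],
    "shop-b/crimson-dress.jpg": [0.8, 0.2, 0.1],
    "shop-c/blue-jeans.jpg": [0.0, 0.1, 0.9],
}
query = [1.0, 0.1, 0.0]

for image_id, score in nearest_neighbors(query, index):
    print(image_id, round(score, 3))
```

Here the two dresses from different shops come back as the closest matches, even though their file names share no model number.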

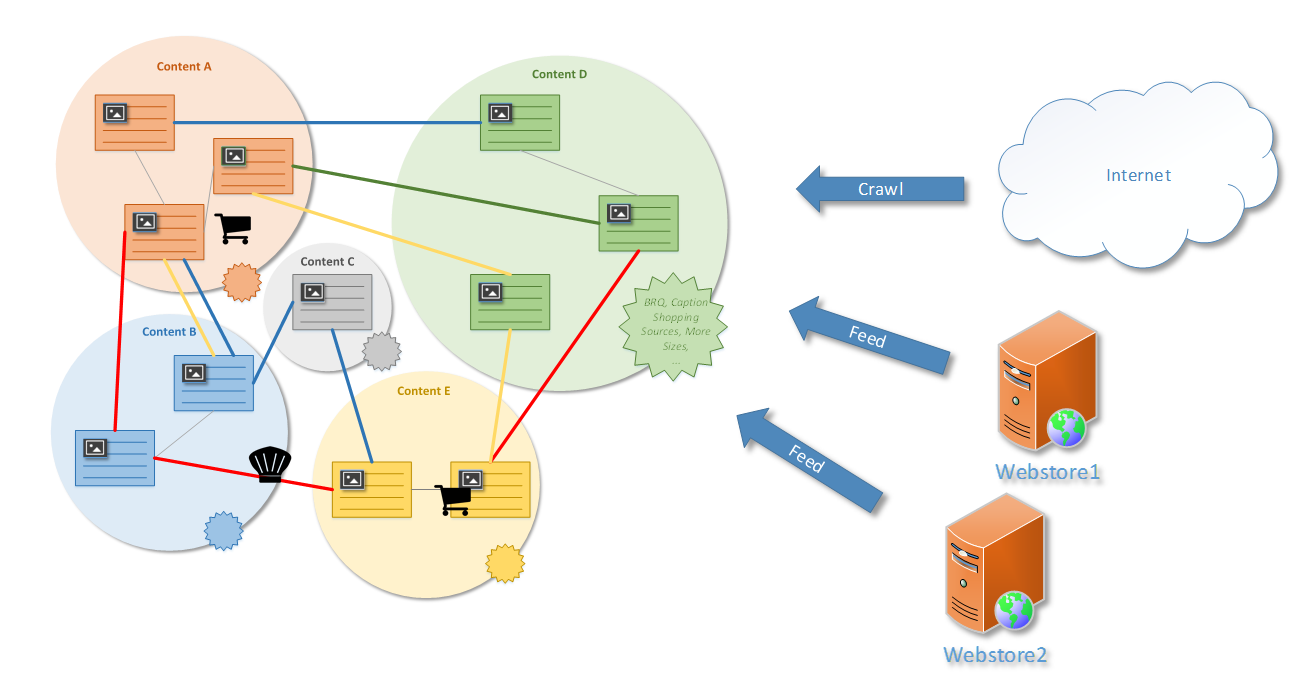

At this point we were able to build content clusters: groups of nearly identical images and their corresponding pages, which we assign to the query intents they satisfy (see the previous blog post for more detail on how these are constructed).
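A content cluster can be pictured as a small record that ties together the near-duplicate images, the pages they appear on and the intents those pages serve. The sketch below assumes each crawled image already carries a `dup_key` shared by its near-duplicates (for instance, the output of a visual-similarity step); that field, the record layout and the intent labels are hypothetical and only meant to show the shape of the data.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class ContentCluster:
    image_urls: set = field(default_factory=set)
    page_urls: set = field(default_factory=set)
    intents: set = field(default_factory=set)   # e.g. {"image", "shopping"}

def build_clusters(records):
    """Group records by their (assumed) near-duplicate key."""
    clusters = defaultdict(ContentCluster)
    for r in records:
        cluster = clusters[r["dup_key"]]
        cluster.image_urls.add(r["image_url"])
        cluster.page_urls.add(r["page_url"])
        cluster.intents.add(r["intent"])
    return dict(clusters)

records = [
    {"dup_key": "k1", "image_url": "https://a.example/dress.jpg",
     "page_url": "https://a.example/gallery", "intent": "image"},
    {"dup_key": "k1", "image_url": "https://b.example/dress-small.jpg",
     "page_url": "https://b.example/buy/dress", "intent": "shopping"},
    {"dup_key": "k2", "image_url": "https://c.example/tacos.jpg",
     "page_url": "https://c.example/recipes/dessert-tacos", "intent": "recipe"},
]

clusters = build_clusters(records)
print(sorted(clusters["k1"].intents))
```

With this shape, showing a shopping badge on an image amounts to checking whether its cluster includes the shopping intent.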

The approach, while attractive and full of potential, also reminded us that tasks humans take for granted aren’t easy even for the most advanced image-recognition algorithms. We are already aware of cases that still need work, such as the "t-shirt case", which we are studying.

The system correctly understands that other t-shirts with writing or symbols on them may be of interest, but is still missing the fact that the subject of the text also matters. Another area of development is improving timeliness of offers – expect to hear about improvements in this area soon.

In general, refining relevance and quality for these scenarios is an ongoing process. Should you run into any scenarios not working to your satisfaction, please do let us know via Bing Feedback, which we’re actively monitoring.

With the image processing machinery in place, we set out in search of the best shopping pages we could find anywhere on the internet for the variety of products our users might be interested in. As a result, we are now able to map hundreds of millions of shopping offers from thousands of sites, which is likely one of the most comprehensive, diverse and relevance-driven (as opposed to ad-driven) shopping catalogs on the net.

Connecting Query Intent to Content Intent

At that point we were ready to start tuning our rankers to ensure we surface the most attractive purchasable content for shopping queries while preserving the relevance of search results.

As is always the case with an undertaking of this complexity, we also built multiple types of metrics into all tiers of the system. This allows us to continually monitor the quality of our shopping page discovery and classification algorithms, the quality of shopping page selection, ranking, freshness and many other aspects of the system affecting user experience. The data produced by these metrics is continually evaluated and used to improve the corresponding algorithms.

With that, we are now able to complete the picture we started sketching in our previous blog post, where we talked about how we construct the Image Graph offline. Each content cluster represents aggregate knowledge about a specific set of (near) duplicate images, along with the key query intents (pure image search, shopping, recipe) that the cluster can meet.

Recipes

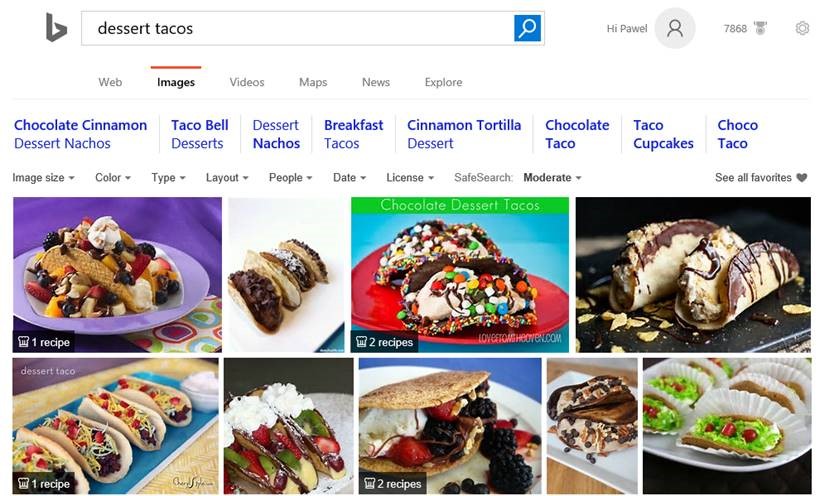

Another segment that received special treatment recently is recipes. Using technology similar to what we built for shopping, we have enabled you to find not only images of the many delicious dishes you love but also the corresponding recipes, allowing you to achieve a completely different level of 'content consumption.'

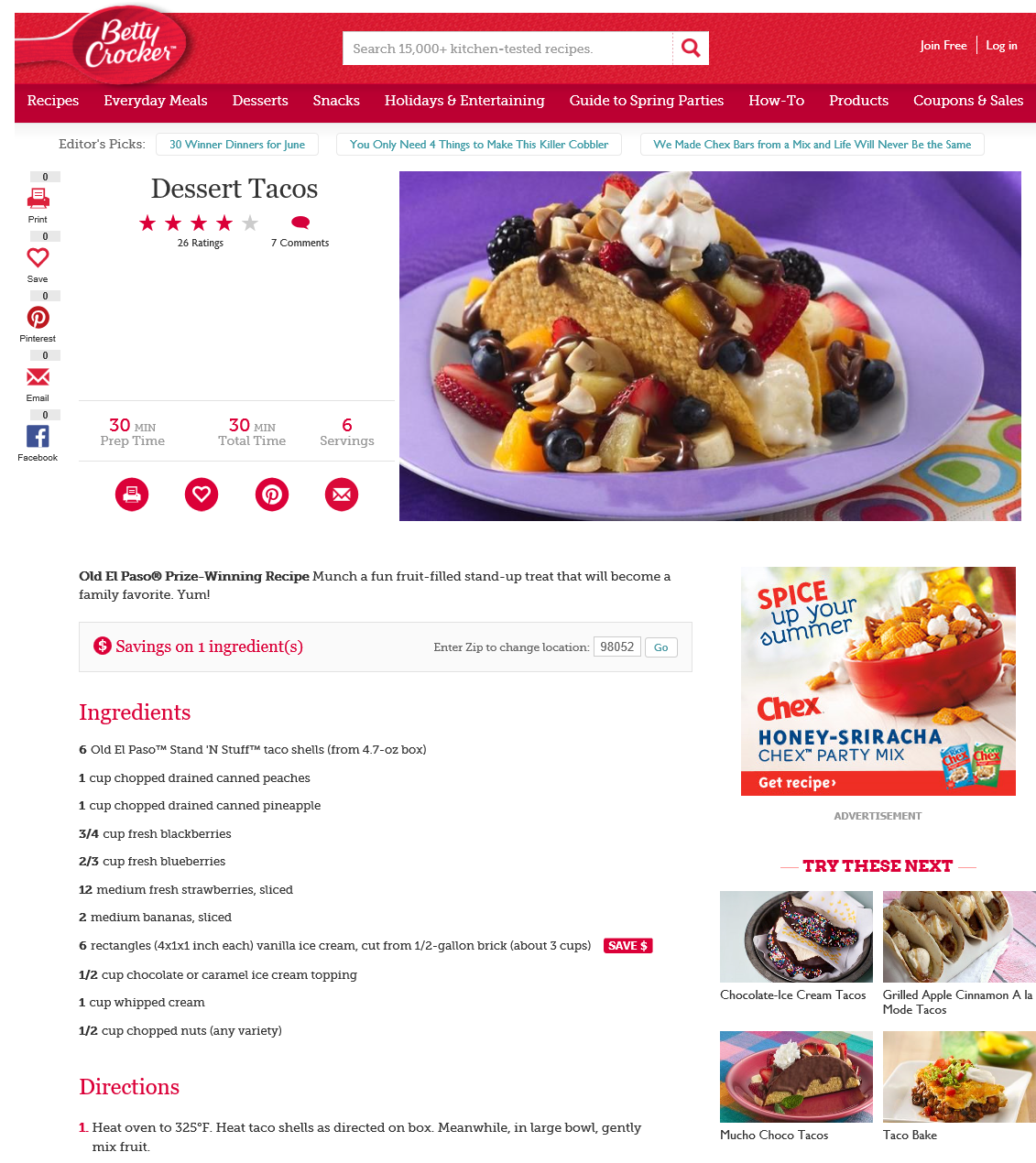

To try it out, enter a query such as "dessert tacos". Just like with shopping queries, you'll see some results adorned with a little chef's hat icon:

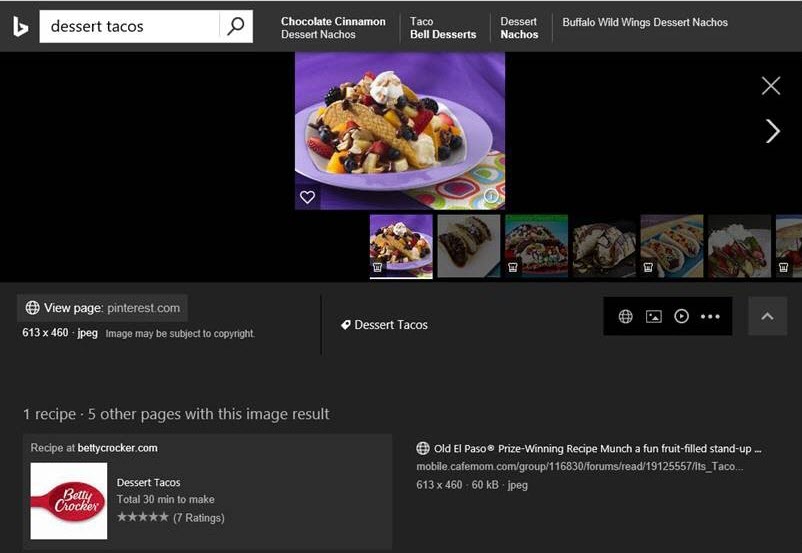

The recipe badge indicates that on click-through you will find a recipe for the dish shown in that particular image. Click on the result and scroll down in the insights view (or just click on the link embedded in the recipe badge itself), and a recipes section appears showing links to pages with a recipe for this exact dish:

As you can see above the total cooking/prep time is conveniently extracted and shown on each page's tile to give you an idea of the complexity of a specific recipe. Finally, selecting one of the links takes you to the page with the recipe:

Wrap up

In our //build announcement as part of Microsoft Cognitive Services we opened up all this knowledge to every developer out there (Image Search API Reference). This allows you to use it, build it into your applications and websites, to create superior experiences on top of what we have.

Overall, since we launched the experience we're seeing hundreds of millions of queries hitting our shopping experience. That number is steadily increasing, as is the number of participating merchants, which tells us that users appreciate the new functionality. In fact, we've been seeing more than 40 percent growth since launch earlier this year. We are deeply grateful for this kind of engagement, and it motivates us even more to continue to improve this area of Bing.

Now those dessert tacos have made us all really hungry, but we are nowhere near done expanding and enriching Bing Multimedia experiences. This work provides us with a solid foundation to build on and sets the stage for the next round of improvements, which will soon be heading your way and which we'll be sharing with you on this blog. Keep checking Bing with your shopping and recipe queries and let us know what you think via Bing Listens or regular Bing Feedback found within Bing.