Since then, transformers have become increasingly popular across Bing and now power new capabilities such as intelligent summarization and the expansion of Question-Answering to 100+ languages. For example, by using domain-adapted transformers, Bing incorporates signals such as the page’s language, its location, and a higher proportion of the web page’s content to provide more relevant, fresh, and contextualized search experiences. Now, when a user in Japan searches “精神病院赤羽” (mental health clinic Akabane), Bing uses the user’s location and language to surface relevant clinic options in Akabane.

Relative to the initial 3-layer transformer integrated into Bing, the latest transformers are much more complex – each model has many more layers and needs to support much longer input sequence lengths. To ensure Bing will continue to deliver the fast, responsive, and relevant search experience our users expect, we’ve invested heavily in transformer inference optimization across both hardware and software to mitigate the performance and cost impact of higher model complexity.

Optimizing transformers using inference-focused NVIDIA T4 GPUs in Azure

Building on our prior experience optimizing transformers on NCsv3 series Azure VMs with NVIDIA V100 Tensor Core GPUs, we focused on NCasT4v3 Azure VMs, because NVIDIA T4 Tensor Core GPUs are inference-focused and support low-precision formats such as INT8. We optimized model inference along three main dimensions: operator fusion, INT8 model quantization, and maximizing inference serving throughput.

Custom fused multi-head attention kernel

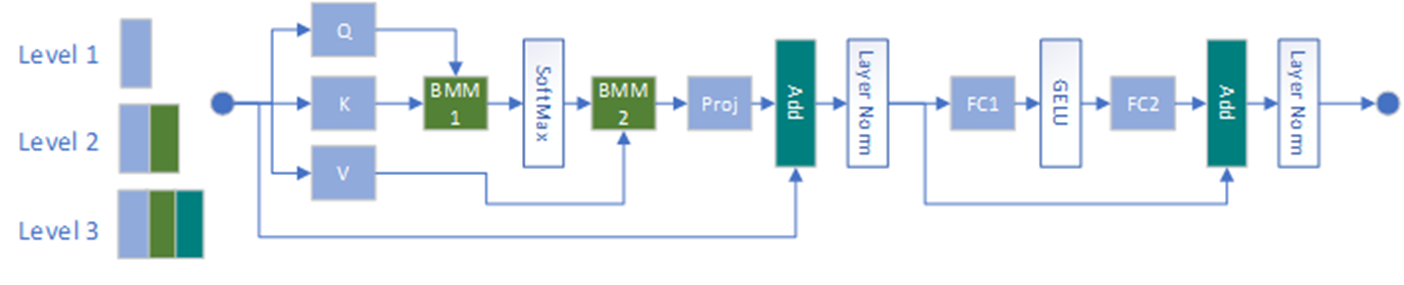

Operator fusion is an optimization technique that accelerates transformer performance by fusing multiple discrete transformer operations into a single kernel. This minimizes the overhead of memory copies and data transfer between kernels, which improves inference performance.

In close collaboration with NVIDIA, we used a custom fused multi-head attention kernel that combined batch matrix multiplication, softmax, and other operators, and adapted it to each model’s specific transformer parameters such as hidden dimension and sequence length. The kernel was also optimized to take advantage of the new INT8 Tensor Core support available on NVIDIA T4 GPUs.
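
To make the idea concrete, here is a minimal pure-Python sketch of single-head attention written two ways. It is not the production CUDA kernel: the function names are hypothetical, and it only illustrates the structural difference between running matrix multiplication, softmax, and a second matrix multiplication as separate passes that each store a full intermediate matrix, and running them in one pass that keeps intermediates local.

```python
import math


def attention_unfused(q, k, v):
    """Three separate passes, each materializing a full intermediate matrix."""
    d = len(q[0])
    # Pass 1: matrix multiplication Q @ K^T, scaled by 1/sqrt(d).
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d)
               for k_row in k] for q_row in q]
    # Pass 2: row-wise softmax over the stored score matrix.
    probs = []
    for row in scores:
        row_max = max(row)
        exps = [math.exp(s - row_max) for s in row]
        total = sum(exps)
        probs.append([e / total for e in exps])
    # Pass 3: second matrix multiplication, probs @ V.
    return [[sum(p * v_row[j] for p, v_row in zip(p_row, v))
             for j in range(len(v[0]))] for p_row in probs]


def attention_fused(q, k, v):
    """One pass per query row; scores and exponentials never leave the loop."""
    d = len(q[0])
    out = []
    for q_row in q:
        row_scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d)
                      for k_row in k]
        row_max = max(row_scores)
        exps = [math.exp(s - row_max) for s in row_scores]
        total = sum(exps)
        out.append([sum(e * v_row[j] for e, v_row in zip(exps, v)) / total
                    for j in range(len(v[0]))])
    return out


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(attention_fused(q, k, v))
```

Both functions return the same values; on a GPU the benefit of the fused form comes from avoiding round trips to device memory between kernels, which this CPU sketch does not measure.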

Applying INT8 quantization

Another approach to improving model inference speed is to decrease the overall amount of computation through model compression via quantization. To leverage NVIDIA’s Tensor Core GPU mixed-precision support, we applied post-training quantization to each model’s FP32 weights in two steps: 1) generate and apply the INT8 scaling factors, and 2) select the operators to quantize in the graph based on the scenario’s precision and latency thresholds. By using only post-training quantization, we were able to avoid quantization-aware finetuning and deploy models to production faster.

We leveraged NVIDIA TensorRT’s INT8 quantization pipeline to first dump each model’s FP32 weights, identify the weight and activation quantization scaling factors based on calibration data, and store the quantized weights. We then evaluated multiple combinations of quantized kernels (levels). In Level 1 quantization, only the projection, embedding, and feed forward layers were quantized to INT8, which improved model latency by 20.6%. For Level 2, we added INT8 batch matrix multiplication kernels, which improved model latency by 30.5%, and for Level 3 we quantized the additional layers, providing a 35.4% latency improvement at P95. Each quantization level has a different impact on model precision, so depending on the specific use case and its precision requirements, we select a different level of INT8 quantization to apply.
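
As a rough illustration of step 1, the sketch below derives a scaling factor from calibration values and applies it to a list of FP32 weights. It assumes symmetric per-tensor quantization with a max-abs scale, which is one common choice and not necessarily what TensorRT’s calibrator selects; the function names are hypothetical.

```python
def int8_scale(calibration_values):
    """Symmetric per-tensor scale: the largest magnitude maps to 127.

    Assumption: max-abs calibration. Real calibrators may pick a
    different threshold to reduce the effect of outliers.
    """
    max_abs = max(abs(x) for x in calibration_values)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values, scale):
    """Round to the nearest integer step and clamp to [-127, 127]."""
    return [max(-127, min(127, round(x / scale))) for x in values]


def dequantize(q_values, scale):
    """Map INT8 values back to approximate FP32 values."""
    return [qv * scale for qv in q_values]


weights = [0.5, -1.27, 0.0, 1.0]
scale = int8_scale(weights)
q_weights = quantize(weights, scale)
restored = dequantize(q_weights, scale)
print(q_weights, restored)
```

For values inside the calibrated range, the round-trip error is at most half a quantization step (`scale / 2`), which is why the choice of scale, and of which operators to quantize, trades precision against latency.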

Maximizing inference serving throughput

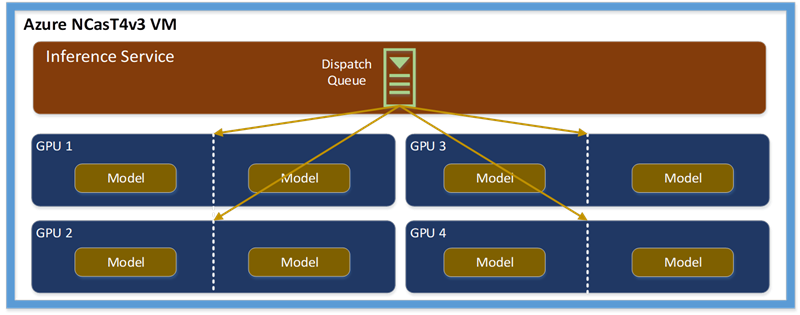

These transformers are served by Bing’s deep learning inference service (DLIS), which runs on a heterogeneous hardware fleet spanning GPU and CPU machines. Each NCasT4v3 Azure VM contains four NVIDIA T4 GPUs and can host four independent model instances. Each model instance is associated with two CUDA streams to further saturate GPU utilization, which means that each GPU server can handle eight concurrent inference requests in total. To minimize service overhead, each server runs a single DLIS service instance. The service instance has a per-model dispatch queue that decides which model instance each request is released to, and it sets the dynamic batch size based on the number of requests in the queue, as shown in the diagram.
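
The dispatch behavior described above can be sketched roughly as follows. This is a simplified, single-threaded model and not DLIS code: the class and method names are hypothetical, the eight execution slots stand for four model instances with two CUDA streams each, and the maximum batch size of 10 is an assumption made for the example.

```python
from collections import deque


class ModelDispatcher:
    """Per-model queue that hands dynamically sized batches to idle slots."""

    def __init__(self, num_slots, max_batch_size):
        self.queue = deque()                 # pending requests for this model
        self.idle = deque(range(num_slots))  # slots ready to run a batch
        self.max_batch_size = max_batch_size

    def submit(self, request):
        self.queue.append(request)

    def dispatch(self):
        """Return (slot, batch), or None if there is no work or no idle slot."""
        if not self.queue or not self.idle:
            return None
        slot = self.idle.popleft()
        # Dynamic batch size: take whatever is queued, up to the cap.
        size = min(len(self.queue), self.max_batch_size)
        batch = [self.queue.popleft() for _ in range(size)]
        return slot, batch

    def release(self, slot):
        """Mark a slot idle again once its batch has finished."""
        self.idle.append(slot)


dispatcher = ModelDispatcher(num_slots=8, max_batch_size=10)
for request_id in range(13):
    dispatcher.submit(request_id)
first = dispatcher.dispatch()
second = dispatcher.dispatch()
print(first, second)
```

With 13 queued requests, the first dispatch takes a full batch of 10 and the second takes the remaining 3, so batch size follows queue depth rather than being fixed.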

While we successfully optimized tensor computation on GPU, we observed that tokenization emerged as a system bottleneck due to concurrent inference requests and longer input sequences. By offloading the tokenization pre-processing to CPU-dense servers, in combination with the accelerated networking available in NCasT4v3 VMs, we reduced the end-to-end latency of each inference by 2.5 milliseconds. This is significant given that each model is typically tuned to run below 10 milliseconds per inference to ensure fast and responsive Bing search results.

Serving complex transformer inference at web-search scale

We benchmarked a 6-layer transformer with a max sequence length of 256 and a batch size of 10 on an NCasT4_v3 Azure VM using the above optimizations. By combining all of them, we observed nearly a 3x increase in throughput per NVIDIA T4 GPU, which was critical to meeting our stringent cost-to-serve and latency requirements.

| Benchmarks on T4 | Precision | Throughput | Model Latency (ms) |
| --- | --- | --- | --- |
| Baseline Model | FP16 | 1123 | 8.9 |
| Fused Kernel | FP16 | 2083 | 4.84 |
| Fused Kernel | INT8 (Level 1) | 2525 | 3.96 |
| Fused Kernel + two CUDA streams | INT8 (Level 1) | 3174 | 6.38 |
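
The “nearly 3x” figure can be checked directly against the table. The short sketch below copies the table values and computes each configuration’s throughput relative to the baseline; the variable and function names are only for illustration.

```python
# (configuration, throughput, model latency in ms), copied from the table.
BENCHMARKS = [
    ("Baseline Model, FP16", 1123, 8.9),
    ("Fused Kernel, FP16", 2083, 4.84),
    ("Fused Kernel, INT8 (Level 1)", 2525, 3.96),
    ("Fused Kernel + two CUDA streams, INT8 (Level 1)", 3174, 6.38),
]


def throughput_gains(rows):
    """Throughput of each row divided by the first (baseline) row."""
    baseline = rows[0][1]
    return [(name, round(throughput / baseline, 2))
            for name, throughput, _latency in rows]


for name, gain in throughput_gains(BENCHMARKS):
    print(f"{name}: {gain:.2f}x")
```

The final configuration comes out at about 2.83x the baseline throughput. In the table, that same row has a higher model latency (6.38 ms) than the single-stream INT8 row (3.96 ms) while delivering the highest throughput.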

If you’re interested in exploring some of these optimizations, please see the following resources:

- NCasT4_v3 series Azure VMs are now generally available in Azure

- To explore applying INT8 quantization on NVIDIA GPUs, see NVIDIA’s BERT Inference with NVIDIA TensorRT demo on GitHub for quantization examples